Concurrency Control and Prioritization

The following policy is based on the Concurrency Scheduling blueprint.

Overview

Concurrency control and prioritization is a technique for managing concurrent requests. With it, services can limit the number of concurrent API calls to reduce the load on the system.

When service limits are reached, Aperture Cloud can queue incoming requests and serve them according to their priority, which is determined by business-critical labels set in the policy and passed via the SDK.

The diagram provides an overview of concurrency scheduling in action, where the scheduler queues and prioritizes requests once the service limit is hit and keeps the count with a token counter.

Requests coming into the system are categorized into different workloads, each of which is defined by its priority and weight. This classification is crucial for the request scheduling process.

The scheduler prioritizes request admission based on the priority and weight assigned to the corresponding workload. This mechanism ensures that high-priority requests are handled appropriately even under high load.
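To make the queue-then-prioritize behavior concrete, here is a minimal toy model. It is not Aperture's scheduler: it ignores weights and tokens, applies strict priority with first-come-first-served tie-breaking, and every name in it (`ToyConcurrencyScheduler`, `submit`, `release`) is hypothetical.

```typescript
// Toy model only: NOT Aperture's scheduler. All names are hypothetical.
interface QueuedRequest {
  id: string;
  priority: number; // higher value = admitted sooner
  seq: number; // arrival order, used as a FIFO tie-breaker
}

class ToyConcurrencyScheduler {
  private maxConcurrency: number;
  private inFlight = 0;
  private seq = 0;
  private queue: QueuedRequest[] = [];

  constructor(maxConcurrency: number) {
    this.maxConcurrency = maxConcurrency;
  }

  // Returns true if admitted immediately, false if the request was queued.
  submit(id: string, priority: number): boolean {
    if (this.inFlight < this.maxConcurrency) {
      this.inFlight++;
      return true;
    }
    this.queue.push({ id, priority, seq: this.seq++ });
    return false;
  }

  // Marks one in-flight request as finished and admits the best queued one.
  // Returns the id of the newly admitted request, if any.
  release(): string | undefined {
    if (this.inFlight === 0) return undefined;
    this.inFlight--;
    if (this.queue.length === 0) return undefined;
    // Highest priority first; earlier arrival wins ties.
    this.queue.sort((a, b) => b.priority - a.priority || a.seq - b.seq);
    const next = this.queue.shift()!;
    this.inFlight++;
    return next.id;
  }
}

const scheduler = new ToyConcurrencyScheduler(1);
scheduler.submit("free-1", 1); // admitted: the only slot is free
scheduler.submit("silver-1", 2); // queued
scheduler.submit("platinum-1", 8); // queued
scheduler.submit("gold-1", 4); // queued

const admissionOrder = [scheduler.release(), scheduler.release(), scheduler.release()];
console.log(admissionOrder.join(", ")); // platinum-1, gold-1, silver-1
```

Although the platinum request arrived after the silver one, it leaves the queue first once a slot frees up, which is the effect the policy aims for under load.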

Before exploring Aperture's concurrency scheduling capabilities, make sure that you have signed up to Aperture Cloud and set up an organization. For more information on how to sign up, follow our step-by-step guide.

Concurrency Scheduling with Aperture SDK

The first step to using the Aperture SDK is to import and set up Aperture Client:

- TypeScript

import { ApertureClient } from "@fluxninja/aperture-js";

// Create aperture client
export const apertureClient = new ApertureClient({
  address: "ORGANIZATION.app.fluxninja.com:443",
  apiKey: "API_KEY",
});

You can obtain your organization address and API Key within the Aperture Cloud

UI by clicking the Aperture tab in the sidebar menu.

The next step consists of setting up essential business labels to prioritize requests. For example, requests can be prioritized by user tier classifications:

- TypeScript

const userTiers = {
  "platinum": 8,
  "gold": 4,
  "silver": 2,
  "free": 1,
};

The next step is making a startFlow call to Aperture. For this call, it is

important to specify the control point (concurrency-scheduling-feature in our

example) and the labels that will align with the concurrency scheduling policy.

The priority label is necessary for request prioritization, while the

workload label differentiates each request.
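One way to keep the labels consistent with the tier map is to derive them in a single place. The sketch below assumes the label keys used in this guide (user_id, priority, workload); the `Tier` type and the `buildFlowLabels` helper are hypothetical and not part of the SDK. The tier map is repeated so the snippet is self-contained.

```typescript
// Tier map repeated from the guide so this snippet stands alone.
type Tier = "platinum" | "gold" | "silver" | "free";

const userTiers: Record<Tier, number> = {
  platinum: 8,
  gold: 4,
  silver: 2,
  free: 1,
};

// Hypothetical helper: builds the labels for one request.
// Label values are strings, so the numeric priority is converted.
function buildFlowLabels(userId: string, tier: Tier): Record<string, string> {
  return {
    user_id: userId,
    priority: userTiers[tier].toString(),
    workload: tier,
  };
}

const labels = buildFlowLabels("some_user_id", "gold");
console.log(labels.priority, labels.workload); // 4 gold
```

The returned object can then be passed as the labels field of the startFlow call.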

According to the policy logic designed to prevent service limit breaches,

Aperture will, on each startFlow call, either give precedence to a critical

request or queue a less urgent one when approaching API limits. The duration a

request remains in the queue is determined by the gRPC deadline, set within the

startFlow call. Setting this deadline to 120000 milliseconds, for example,

indicates that the request can be queued for a maximum of 2 minutes. After this

interval, the request will be rejected.
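The deadline is an absolute timestamp in milliseconds, so it is computed from the current time plus the maximum queueing time. A small sketch of that arithmetic follows; `queueDeadline` is a hypothetical helper, not an SDK function.

```typescript
// Hypothetical helper: absolute deadline (epoch milliseconds) for a request
// that may wait in the queue for at most maxQueueMs.
function queueDeadline(maxQueueMs: number, nowMs: number = Date.now()): number {
  if (!Number.isFinite(maxQueueMs) || maxQueueMs <= 0) {
    throw new RangeError("maxQueueMs must be a positive number of milliseconds");
  }
  return nowMs + maxQueueMs;
}

const TWO_MINUTES_MS = 2 * 60 * 1000; // 120000
const fixedNow = 1700000000000; // fixed timestamp, used here only for a repeatable demo
console.log(queueDeadline(TWO_MINUTES_MS, fixedNow) - fixedNow); // 120000
```

In application code the second argument would normally be omitted, so the deadline is measured from the moment the request is sent.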

In this example, we're only tracking and logging requests sent to Aperture.

However, once the startFlow call is made, business logic can be executed

directly, as excess requests are queued, eliminating the need to check if a flow

shouldRun.

- TypeScript

// Assumes `tier` is a key of userTiers and `priority` is the matching value.
const flow = await apertureClient.startFlow(
  "concurrency-scheduling-feature",
  {
    labels: {
      user_id: "some_user_id",
      priority: priority.toString(),
      workload: tier,
    },
    grpcCallOptions: {
      deadline: Date.now() + 120000, // ms
    },
  },
);

if (flow.shouldRun()) {
  console.log(`[${tier} Tier] Request accepted with priority ${priority}.`);
  // sleep for 5 seconds to simulate a long-running request
  await new Promise((resolve) => setTimeout(resolve, 5000));
} else {
  console.log(`[${tier} Tier] Request rejected. Priority was ${priority}.`);
}

// Always end the flow so the in-flight count is released.
flow.end();

It is important to make the end call after processing each request, to remove

in-flight requests and send telemetry data that would provide granular

visibility for each flow.
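Because a missed end call leaves a request counted as in-flight, it can help to wrap the business logic so that end is called even when the logic throws. The following is a minimal sketch: `FlowLike` only mirrors the two calls used in this guide (shouldRun and end), while `withFlow` and `fakeFlow` are hypothetical helpers and not part of the SDK.

```typescript
// Minimal shape of a flow, assumed from the calls used in this guide.
interface FlowLike {
  shouldRun(): boolean;
  end(): void | Promise<void>;
}

// Hypothetical wrapper: runs `work` only if the flow was admitted,
// and always calls end(), whether `work` succeeds or throws.
async function withFlow<T>(
  flow: FlowLike,
  work: () => Promise<T>,
): Promise<T | undefined> {
  try {
    if (!flow.shouldRun()) return undefined; // rejected or timed out in the queue
    return await work();
  } finally {
    await flow.end();
  }
}

// Stand-in flow for demonstration; a real flow would come from startFlow.
function fakeFlow(accepted: boolean) {
  let ended = 0;
  return {
    shouldRun: () => accepted,
    end: () => {
      ended++;
    },
    endedCount: () => ended,
  };
}

const demoFlow = fakeFlow(true);
withFlow(demoFlow, async () => "done").then((result) => {
  console.log(result, demoFlow.endedCount()); // done 1
});
```

The try/finally keeps the release of the concurrency slot independent of how the request handler exits.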

Create a Concurrency Scheduling Policy

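A policy can be created either through the Aperture Cloud UI or with aperturectl. The Aperture Cloud UI steps come first, followed by the aperturectl steps.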

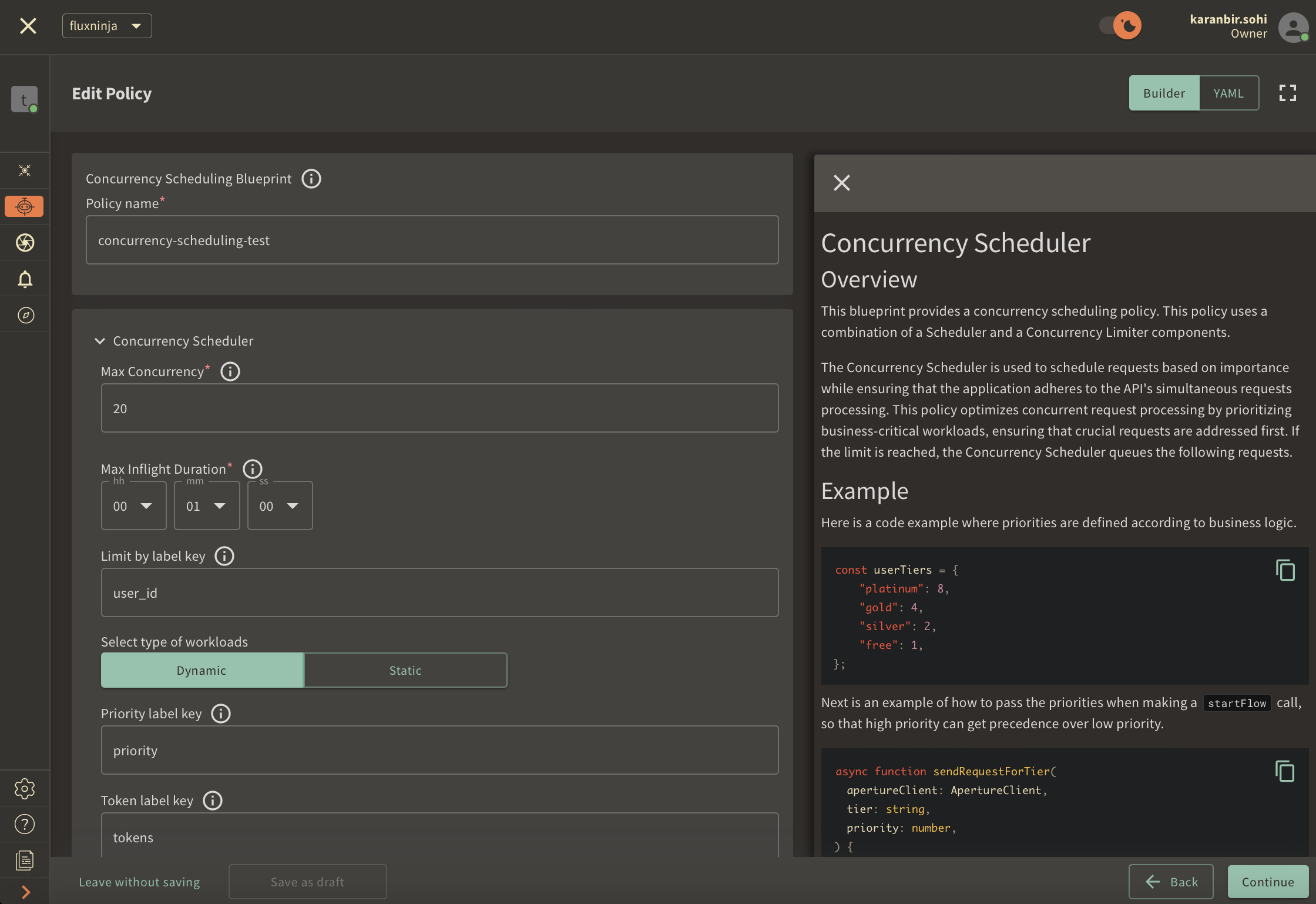

Navigate to the Policies tab on the sidebar menu, and select Create Policy

in the upper-right corner. Next, choose the Request Prioritization blueprint,

and from the drop-down options select Concurrency based. Now, complete the form

with these specific values:

- Policy Name: Unique for each policy, this field can be used to define policies tailored for different use cases. Set the policy name to concurrency-scheduling-test.

- Limit by label key: Determines the specific label key used for concurrency limits. We'll use user_id as an example.

- Max inflight duration: Configures the time duration after which a flow is assumed to have ended if the end call is missed. We'll set it to 60s as an example.

- Max concurrency: Configures the maximum number of concurrent requests that a service can take. We'll set it to 20 as an example.

- Priority label key: This field specifies the label that is used to determine the priority. We will leave the label as it is.

- Tokens label key: This field specifies the label that is used to determine tokens. We will leave the label as it is.

- Workload label key: This field specifies the label that is used to determine the workload. We will leave the label as it is.

- Control point: It can be a particular feature or execution block within a service. We'll use concurrency-scheduling-feature as an example.

Once you've completed these fields, click Continue and then Apply Policy to

finalize the policy setup.
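The Max inflight duration setting can be pictured as an expiry on each in-flight entry: if end is never called, the entry stops counting toward the concurrency limit once it is older than the configured duration. The toy model below illustrates that idea only. It is not Aperture's implementation, and `InflightTracker` and its methods are hypothetical names.

```typescript
// Toy model of an in-flight counter with expiry; not Aperture's implementation.
class InflightTracker {
  private maxInflightMs: number;
  private startedAt: Record<string, number> = {};

  constructor(maxInflightMs: number) {
    this.maxInflightMs = maxInflightMs;
  }

  // Records that a flow started at nowMs.
  start(flowId: string, nowMs: number): void {
    this.startedAt[flowId] = nowMs;
  }

  // Normal path: the caller reports that the flow has ended.
  end(flowId: string): void {
    delete this.startedAt[flowId];
  }

  // Drops flows older than the limit, then returns the in-flight count.
  count(nowMs: number): number {
    for (const id of Object.keys(this.startedAt)) {
      if (nowMs - this.startedAt[id] >= this.maxInflightMs) {
        delete this.startedAt[id];
      }
    }
    return Object.keys(this.startedAt).length;
  }
}

const tracker = new InflightTracker(60000); // 60s, as in the form above
tracker.start("a", 0);
tracker.start("b", 10000);
tracker.end("a"); // "a" ends normally; "b" never calls end
console.log(tracker.count(30000)); // 1
console.log(tracker.count(70000)); // 0
```

Without the expiry, the flow that never called end would occupy one of the 20 concurrency slots indefinitely.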

If you haven't installed aperturectl yet, begin by following the Set up CLI aperturectl guide. Once aperturectl is installed, generate the values file necessary for creating the concurrency scheduling policy using the command below:

aperturectl blueprints values --name=concurrency-scheduling/base --output-file=concurrency-scheduling-test.yaml

Following are the fields that need to be filled for creating a concurrency scheduling policy:

- policy_name: Unique for each policy, this field can be used to define policies tailored for different use cases. Set the policy name to concurrency-scheduling-test.

- limit_by_label_key: Determines the specific label key used for concurrency limits. We'll use user_id as an example.

- max_inflight_duration: Configures the time duration after which a flow is assumed to have ended if the end call is missed. We'll set it to 60s as an example.

- max_concurrency: Configures the maximum number of concurrent requests that a service can take. We'll set it to 20 as an example.

- priority_label_key: This field specifies the label that is used to determine the priority. We will leave the label as it is.

- tokens_label_key: This field specifies the label that is used to determine tokens. We will leave the label as it is.

- workload_label_key: This field specifies the label that is used to determine the workload. We will leave the label as it is.

- control_point: It can be a particular feature or execution block within a service. We'll use concurrency-scheduling-feature as an example.

Here is how the complete values file would look:

# yaml-language-server: $schema=../../../../../blueprints/concurrency-scheduling/base/gen/definitions.json
blueprint: concurrency-scheduling/base
uri: ../../../../../blueprints
policy:
  policy_name: "concurrency-scheduling-test"
  components: []
  concurrency_scheduler:
    alerter:
      alert_name: "Too many inflight requests"
    concurrency_limiter:
      limit_by_label_key: "user_id"
      max_inflight_duration: "60s"
    max_concurrency: 20
    scheduler:
      priority_label_key: "priority"
      tokens_label_key: "tokens"
      workload_label_key: "workload"
    selectors:
      - control_point: "concurrency-scheduling-feature"
  resources:
    flow_control:
      classifiers: []

The last step is to apply the policy using the following command:

aperturectl cloud blueprints apply --values-file=concurrency-scheduling-test.yaml

Next, we'll proceed to run an example to observe the newly implemented policy in action.

Concurrency Scheduling in Action

Begin by cloning the

Aperture JS SDK. Look for the

concurrency_scheduler_example.ts in the example directory within the SDK.

Switch to the example directory and follow these steps to run the example:

- Install the necessary packages:

  - Run npm install to install the base dependencies.

  - Run npm install @fluxninja/aperture-js to install the Aperture SDK.

- Run npx tsc to compile the TypeScript example.

- Run node dist/concurrency_scheduler_example.js to start the compiled example.

Once the example is running, it will prompt you for your Organization address and API Key. In the Aperture Cloud UI, select the Aperture tab from the sidebar menu. Copy and enter both your Organization address and API Key to establish a connection between the SDK and Aperture Cloud.

Monitoring Concurrency Scheduling Policy

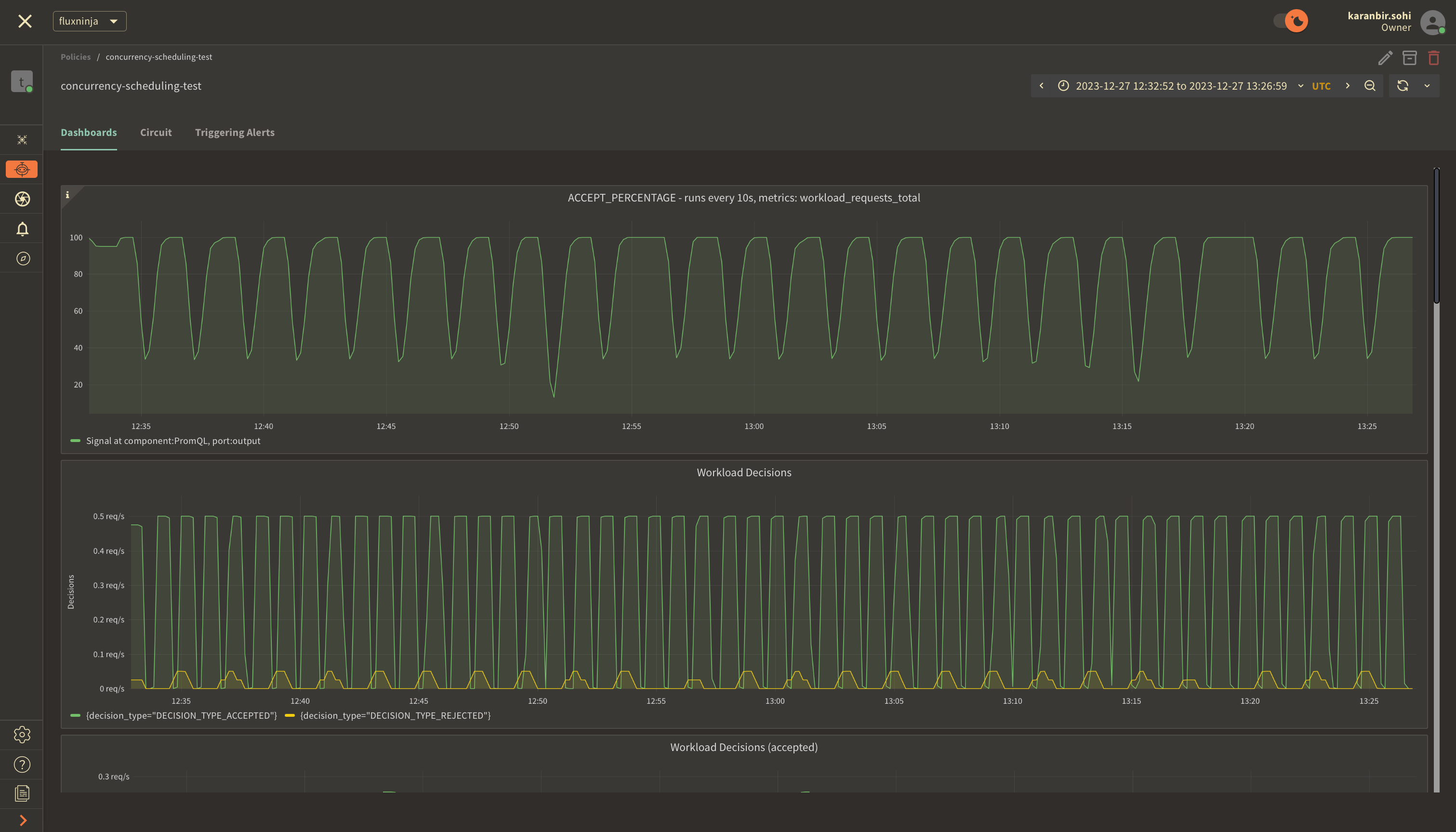

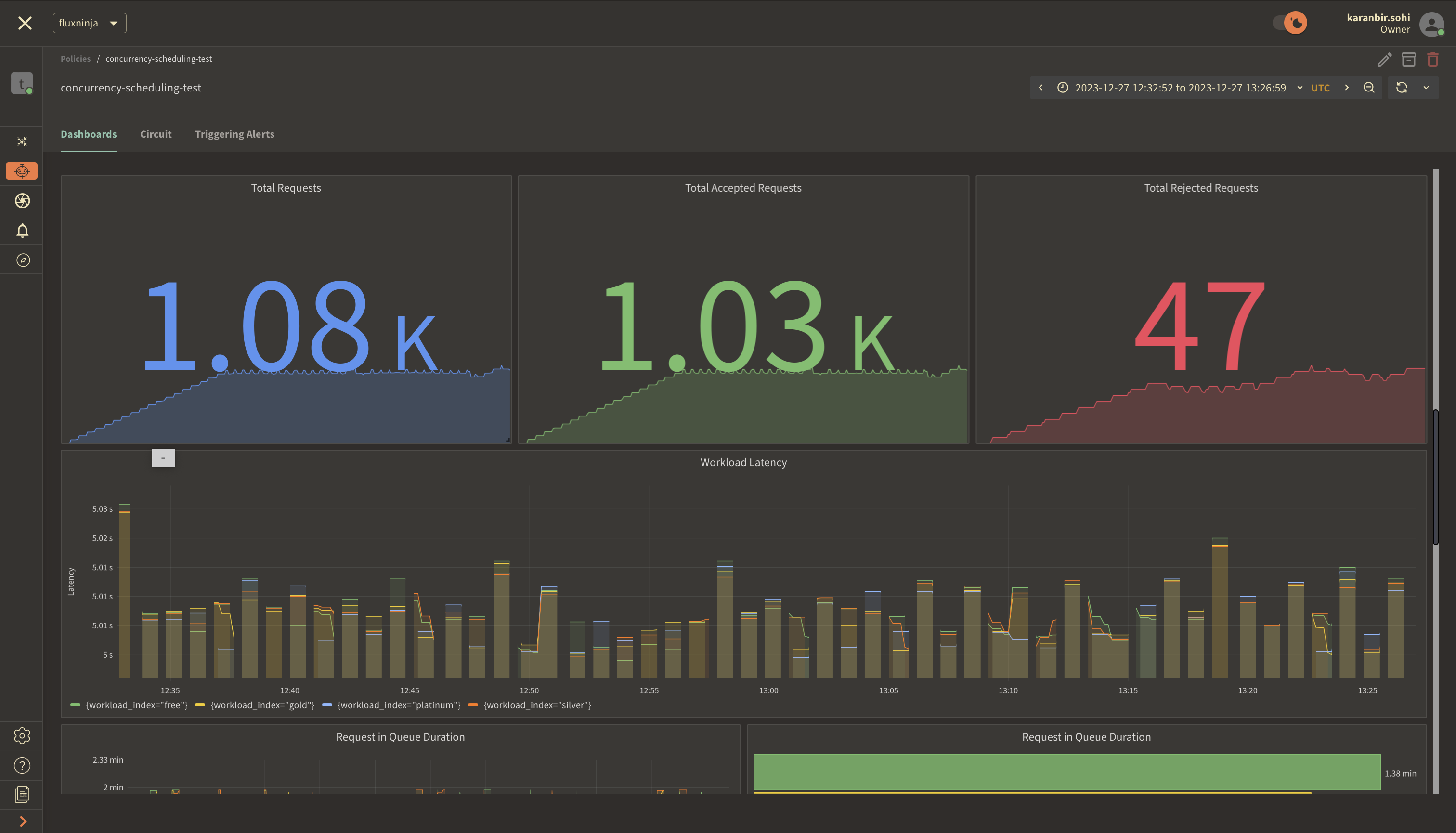

After running the example for a few minutes, you can review the telemetry data

in the Aperture Cloud UI. Navigate to the Aperture Cloud UI, and click the

Policies tab located in the sidebar menu. Then, select the

concurrency-scheduling-test policy that you previously created.

Once you've clicked on the policy, you will see the following dashboard:

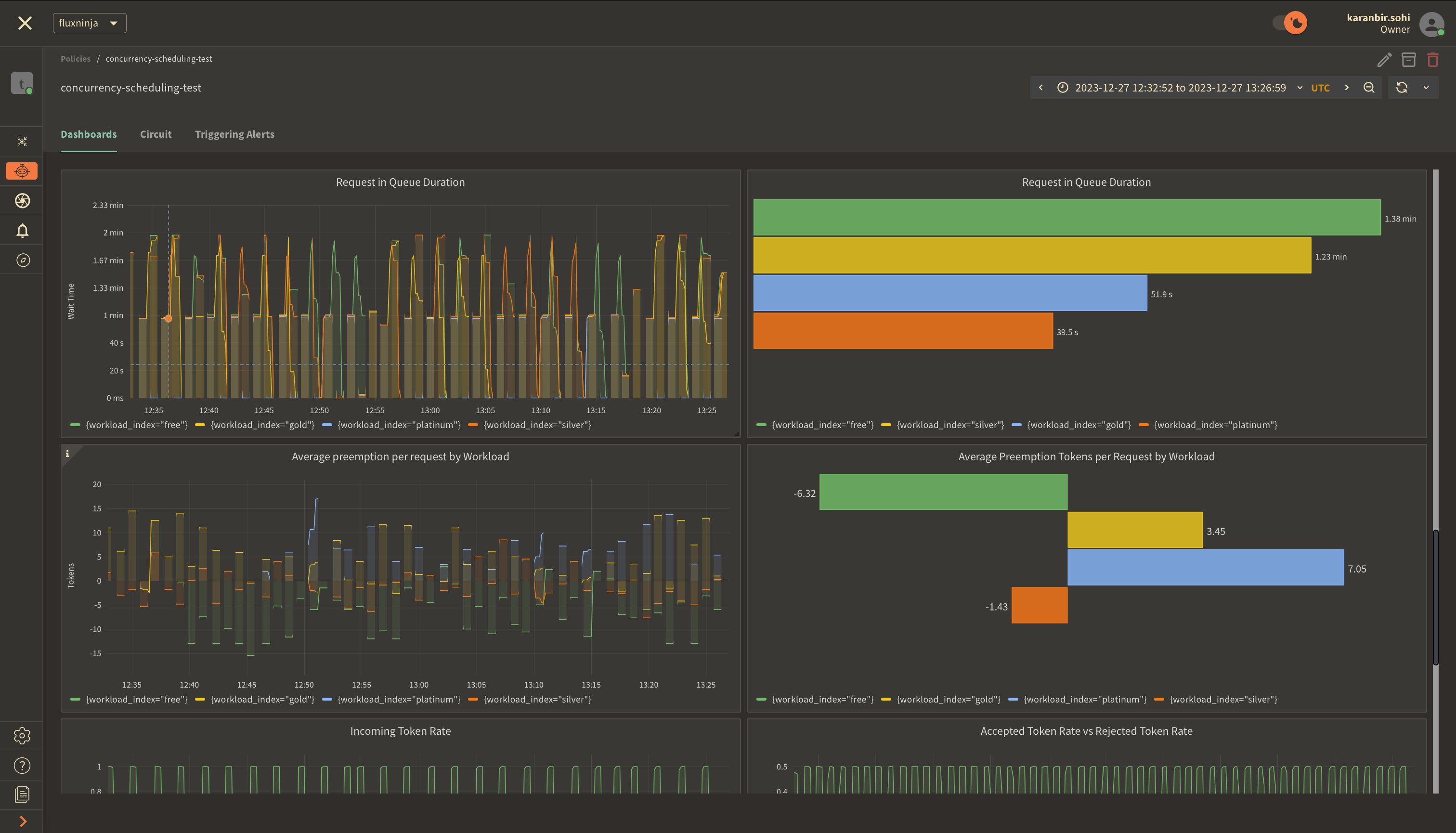

The two panels above provide insights into how the policy is performing by monitoring the number of accepted and rejected requests along with the acceptance percentage.
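As a rough illustration of the acceptance percentage, the sketch below computes it from accepted and rejected counts. The formula is an assumption about what the panel shows (accepted requests as a share of all decided requests), and `acceptancePercentage` is a hypothetical helper.

```typescript
// Assumed definition: accepted requests as a percentage of accepted + rejected.
function acceptancePercentage(accepted: number, rejected: number): number {
  const total = accepted + rejected;
  // With no traffic there is nothing to report; 0 is returned by convention here.
  return total === 0 ? 0 : (accepted / total) * 100;
}

console.log(acceptancePercentage(150, 50)); // 75
```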

The panels above offer insights into the request details, including their latency.

These panels display insights into queue duration for workload requests and

highlight the average of prioritized requests that moved ahead in the queue.

Preemption for each token is measured as the average number of tokens by which a

request belonging to a specific workload is preempted in the queue.