This is a guest post by CodeRabbit, a startup that uses OpenAI's API to provide AI-driven code reviews for GitHub and GitLab repositories.

Since CodeRabbit launched a couple of months ago, it has received an enthusiastic response and hundreds of sign-ups. CodeRabbit has been installed in over 1300 GitHub organizations and typically reviews more than 2000 pull requests per day. Usage continues to grow rapidly, with healthy week-over-week growth.

While this rapid growth is encouraging, we've encountered challenges with OpenAI's stringent rate limits, particularly for the newer gpt-4 model that powers CodeRabbit. In this blog post, we will delve into the details of OpenAI rate limits and explain how we leveraged FluxNinja's Aperture load management platform to ensure a reliable experience as we continue to grow our user base.

Understanding OpenAI rate limits

OpenAI imposes fine-grained rate limits on both requests per minute and tokens per minute for each AI model they offer. In our account, for example, we are allocated the following rate limits:

| Model | Tokens per minute | Requests per minute |
|---|---|---|
| gpt-3.5-turbo | 1000000 | 12000 |
| gpt-3.5-turbo-16k | 180000 | 3500 |
| gpt-4 | 40000 | 200 |

We believe that the rate limits are in place for several reasons and are unlikely to change in the near future:

- Advanced models such as gpt-4 are computationally intensive. Each request can take several seconds or even minutes to process. For example, 30s response time is fairly typical for complex tasks. OpenAI sets these limits to manage aggregate load on their infrastructure and provide fair access to users.

- The demand for AI has outstripped the supply of available hardware, particularly the GPUs required to run these models. It will take some time for the industry to meet this exploding demand.

CodeRabbit's OpenAI usage pattern and challenges

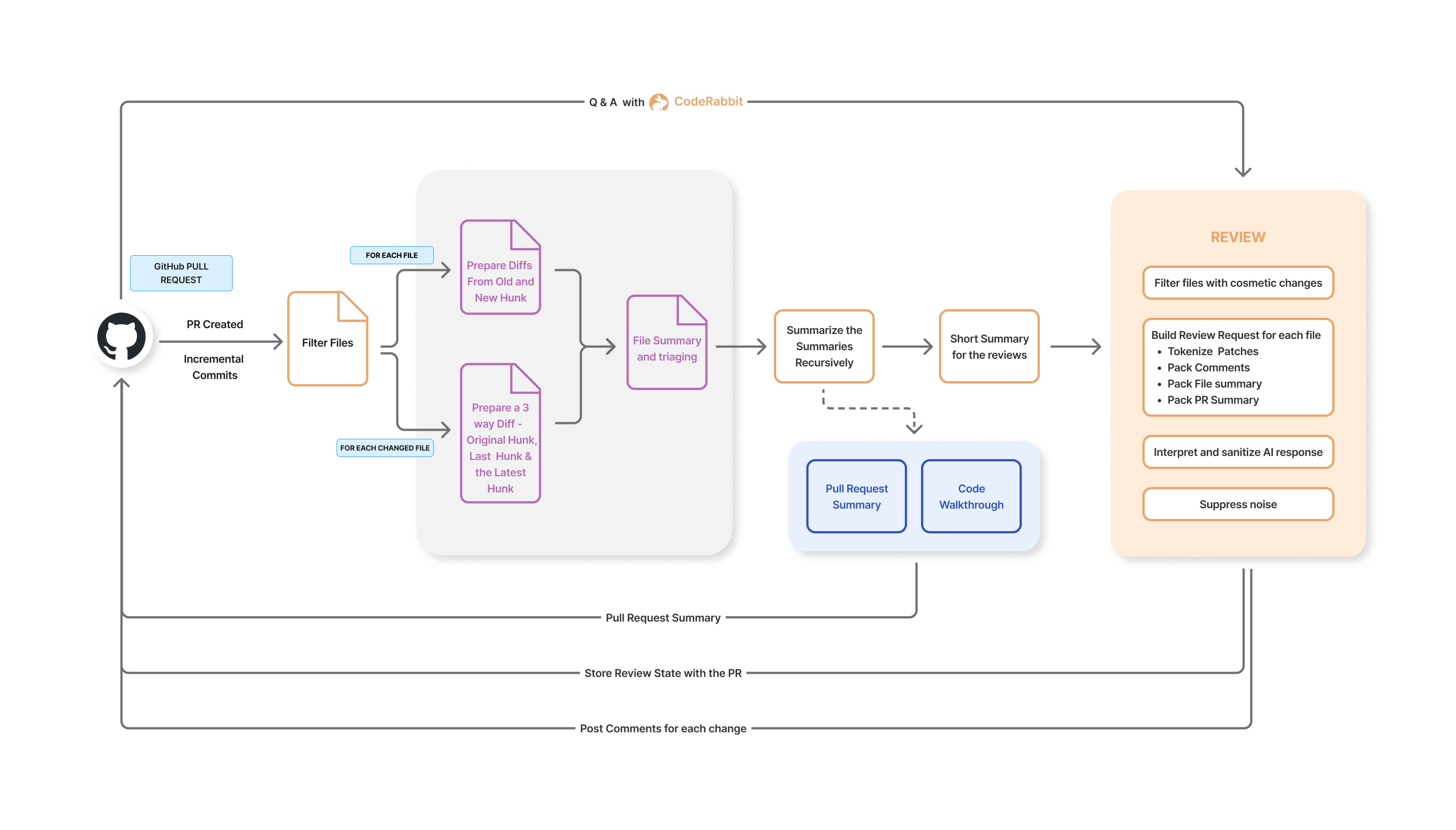

CodeRabbit is an AI-driven code review application that integrates with GitHub or GitLab repositories. It analyzes pull requests and provides feedback to the developers on the quality of their code. The feedback is provided in the form of comments on the pull request, allowing the developers to enhance the code based on the provided suggestions in the follow-up commits.

CodeRabbit Pull Request Review Workflow


CodeRabbit employs a combination of the gpt-3.5-turbo and gpt-4 family of models. For simpler tasks such as summarization, we use the more economical gpt-3.5-turbo family of models, whereas intricate tasks such as in-depth code reviews are performed by the slow and expensive gpt-4 family of models. Our usage pattern is such that each file in a pull request is summarized and reviewed concurrently.

During peak hours or when dealing with large pull requests (consisting of 50+ files), we began to encounter 429 Too Many Requests errors from OpenAI. Even though we had a retry and back-off mechanism, many requests were still timing out after multiple attempts. Our repeated requests to OpenAI to increase our rate limits were met with no success.

To mitigate these challenges, we cobbled together a makeshift solution for our API client:

- Set up four separate OpenAI accounts to distribute the load.

- Implemented an API concurrency limit on each reviewer instance to cap the number of in-flight requests to OpenAI.

- Increased the back-off time for each retry and increased the number of retries. OpenAI's rate limit headers were not helpful in determining the optimal back-off times, as the headers were outdated by tens of seconds and did not account for in-flight requests.

- Transitioned from Google Cloud Functions to Google Cloud Run to ensure that instances would not be terminated due to execution time limits while requests were in the retry-back-off loop.
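
To make the second and third items concrete, here is a minimal sketch of a per-instance concurrency cap combined with exponential back-off. It is not our production code: the names `ConcurrencyLimiter`, `backoffDelayMs`, and `withRetries`, as well as the default delays, are assumptions chosen for illustration.

```typescript
// Illustrative only: class and function names are hypothetical,
// not taken from CodeRabbit's code or from any SDK.

/** Caps the number of tasks running at once on one instance. */
class ConcurrencyLimiter {
  private inFlight = 0;
  private waiters: Array<() => void> = [];

  constructor(private readonly limit: number) {}

  get active(): number { return this.inFlight; }
  get queued(): number { return this.waiters.length; }

  async run<T>(task: () => Promise<T>): Promise<T> {
    if (this.inFlight < this.limit) {
      this.inFlight++; // a slot is free, take it
    } else {
      // Wait until a finishing task hands its slot over.
      await new Promise<void>((resolve) => this.waiters.push(resolve));
    }
    try {
      return await task();
    } finally {
      const next = this.waiters.shift();
      if (next) next(); // transfer the slot, inFlight stays unchanged
      else this.inFlight--;
    }
  }
}

/** Exponential back-off: baseMs * 2^attempt, capped at capMs. */
function backoffDelayMs(attempt: number, baseMs = 1000, capMs = 60000): number {
  return Math.min(capMs, baseMs * Math.pow(2, attempt));
}

/** Retries a failing task up to maxRetries times, sleeping between attempts. */
async function withRetries<T>(
  task: () => Promise<T>,
  maxRetries: number,
  sleep: (ms: number) => Promise<void> =
    (ms) => new Promise<void>((resolve) => setTimeout(resolve, ms)),
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await task();
    } catch (err) {
      if (attempt >= maxRetries) throw err; // out of retries
      await sleep(backoffDelayMs(attempt));
    }
  }
}
```

A call site would wrap each OpenAI request as `limiter.run(() => withRetries(callOpenAI, 5))`, where `callOpenAI` stands in for the actual request function. The weakness is visible in the sketch itself: the limit, base delay, cap, and retry count are all local guesses, and no instance knows what the others are sending.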

Although these adjustments provided temporary relief, the challenges resurfaced within a few days as the load increased. We were doing a lot of guesswork to figure out the "right" number of concurrent requests, back-off times, max retries, and so on.

Complicating matters further, we added a chat feature that allows users to consult the CodeRabbit bot for code generation and technical advice. While we aimed for real-time responses, the back-off mechanisms made reply time unpredictable, particularly during peak usage, thereby degrading the user experience.

We needed a better solution, one that could globally manage the rate limits across all reviewer instances and prioritize requests based on user tiers and the nature of requests.

FluxNinja Aperture to the rescue

We were introduced to the FluxNinja Aperture load management platform by one of our advisors. Aperture is an open source load management platform that offers advanced rate-limiting, request prioritization, and quota management features. Essentially, Aperture serves as a global token bucket, facilitating client-side rate limits and business-attribute-based request prioritization.

Implementing the Aperture TypeScript SDK in our reviewer service

Our reviewer service runs on Google Cloud Run, while the Aperture Agents are deployed on a separate Kubernetes cluster (GKE). To integrate with the Aperture Agents, we employ Aperture's TypeScript SDK. Before calling OpenAI, we rely on Aperture Agent to gate the request using the StartFlow method. To provide more context to Aperture, we also attach the following labels to each request:

- model_variant: This specifies the model variant being used (gpt-4, gpt-3.5-turbo, or gpt-3.5-turbo-16k). Requests and tokens per minute rate limit policies are set individually for each model variant.

- api_key: This is a cryptographic hash of the OpenAI API key, and rate limits are enforced on a per-key basis.

- estimated_tokens: As the tokens per minute quota limit is enforced based on the estimated tokens for the completion request, we need to provide this number for each request to Aperture for metering. Following OpenAI's guidance, we calculate estimated_tokens as (character_count / 4) + max_tokens. Note that OpenAI's rate limiter doesn't tokenize the request using the model's specific tokenizer but relies on a character count-based heuristic.

- product_tier: CodeRabbit offers both pro and free tiers. The pro tier provides comprehensive code reviews, whereas the free tier offers only the summary of the pull request.

- product_reason: We also label why a review was initiated under the pro tier. For example, the reason could be that the user is a paid_user, trial_user or an open_source_user. Requests to OpenAI are prioritized based on these labels.

- priority: Requests are ranked according to a priority number provided in this label. For example, requests from paid_user are given precedence over those from trial_user and open_source_user. The base priority is incremented for each file reviewed, enabling pull requests that are further along in the review process to complete more quickly than newly submitted ones. Additionally, chat messages are assigned a much higher priority compared to review tasks.
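
The sketch below shows one way these labels could be assembled before a request is gated. Only the `(character_count / 4) + max_tokens` formula and the label names come from the description above. The numeric priorities, the chat boost, the rounding, the helper names, and the assumption that labels travel as string key/value pairs are all illustrative.

```typescript
type ProductReason = "paid_user" | "trial_user" | "open_source_user";

// Hypothetical numbers: the post only says paid users rank above the
// other two and that chat ranks much higher than reviews.
const BASE_PRIORITY: Record<ProductReason, number> = {
  paid_user: 200,
  trial_user: 100,
  open_source_user: 100,
};
const CHAT_PRIORITY_BOOST = 1000;

/**
 * Character-count heuristic: (character_count / 4) + max_tokens.
 * Rounding up is our choice here; `prompt.length` counts UTF-16 code
 * units, which is close enough for a sketch.
 */
function estimateTokens(prompt: string, maxTokens: number): number {
  return Math.ceil(prompt.length / 4) + maxTokens;
}

/** Base priority by user type, plus one per file already reviewed. */
function computePriority(
  reason: ProductReason,
  filesReviewed: number,
  isChat: boolean,
): number {
  const base = BASE_PRIORITY[reason] + filesReviewed;
  return isChat ? base + CHAT_PRIORITY_BOOST : base;
}

/** Builds the label set for one request; all values are strings. */
function buildLabels(opts: {
  modelVariant: string;
  apiKeyHash: string; // hash computed elsewhere, never the raw key
  prompt: string;
  maxTokens: number;
  productTier: "pro" | "free";
  productReason: ProductReason;
  filesReviewed: number;
  isChat: boolean;
}): Record<string, string> {
  return {
    model_variant: opts.modelVariant,
    api_key: opts.apiKeyHash,
    estimated_tokens: String(estimateTokens(opts.prompt, opts.maxTokens)),
    product_tier: opts.productTier,
    product_reason: opts.productReason,
    priority: String(
      computePriority(opts.productReason, opts.filesReviewed, opts.isChat),
    ),
  };
}
```

For a 400-character prompt with `max_tokens` set to 500, `estimateTokens` yields 600, which is the amount that would be metered against the tokens per minute quota.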

Request Flow between CodeRabbit, Aperture Agent(s) and OpenAI

Integration with Aperture TypeScript SDK

Policy configuration in Aperture: Aligning with OpenAI's rate limits

Aperture offers a foundational "blueprint" for managing quotas, consisting of two main components:

- Rate limiter: OpenAI employs a token bucket algorithm to impose rate limits, and that is directly compatible with Aperture's rate limiter. For example, in the tokens per minute policy for gpt-4, we have allocated a burst capacity of 40000 tokens, and a refill rate of 40000 tokens per minute. The bucket begins to refill the moment the tokens are withdrawn, aligning with OpenAI's rate-limiting mechanism. This ensures our outbound request and token rate remains synchronized with OpenAI's enforced limits.

- Scheduler: Aperture has a weighted fair queuing scheduler that prioritizes the requests based on multiple factors such as the number of tokens, priority levels and workload labels.
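
The token bucket behaviour described in the rate limiter item can be sketched in a few lines. This is a generic, single-process illustration using the gpt-4 numbers from above, not Aperture's implementation. The class and method names are assumptions, and time is passed in explicitly so the arithmetic is easy to follow.

```typescript
/** Generic token bucket: holds up to `capacity` tokens, refills continuously. */
class TokenBucket {
  private available: number;
  private lastRefillMs: number;

  constructor(
    private readonly capacity: number, // burst capacity, in tokens
    private readonly refillPerMinute: number, // refill rate, tokens per minute
    nowMs: number,
  ) {
    this.available = capacity; // start with a full bucket
    this.lastRefillMs = nowMs;
  }

  /** Adds the tokens earned since the last call, never exceeding capacity. */
  private refill(nowMs: number): void {
    if (nowMs <= this.lastRefillMs) return;
    const earned = ((nowMs - this.lastRefillMs) / 60000) * this.refillPerMinute;
    this.available = Math.min(this.capacity, this.available + earned);
    this.lastRefillMs = nowMs;
  }

  /** Withdraws `tokens` if they are available right now. */
  tryTake(tokens: number, nowMs: number): boolean {
    this.refill(nowMs);
    if (tokens > this.available) return false;
    this.available -= tokens;
    return true;
  }

  /** Milliseconds until `tokens` could be granted (0 if available now). */
  waitTimeMs(tokens: number, nowMs: number): number {
    this.refill(nowMs);
    const deficit = tokens - this.available;
    if (deficit <= 0) return 0;
    return Math.ceil((deficit / this.refillPerMinute) * 60000);
  }
}

// gpt-4 tokens per minute policy: burst 40000, refill 40000 per minute.
const gpt4Bucket = new TokenBucket(40000, 40000, 0);
```

With this bucket, a 30000-token request at time 0 is admitted, a following 20000-token request is not, and `waitTimeMs` reports 15000 ms, the time needed to refill the missing 10000 tokens. In real use the timestamps would come from `Date.now()`, and the bucket would have to be shared across all reviewer instances, which is the part Aperture takes care of.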

By fine-tuning these two components in Aperture, we can send requests as fast as OpenAI's limits allow and deliver an optimal user experience, while ensuring that we don't exceed the rate limits.
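
To give a feel for how token counts and priorities interact in a weighted fair queuing scheduler, here is a deliberately simplified sketch. It is not Aperture's scheduler: the `FairQueue` class, the per-request finish tag of `tokens / priority`, and the example priorities are assumptions made for illustration.

```typescript
interface QueuedRequest {
  id: string;
  tokens: number; // estimated tokens for the request
  priority: number; // higher means more important, must be positive
}

/** Simplified fair queue: the request with the smallest finish tag goes first. */
class FairQueue {
  private virtualTime = 0;
  private seq = 0;
  private items: Array<{ req: QueuedRequest; finish: number; seq: number }> = [];

  enqueue(req: QueuedRequest): void {
    if (req.priority <= 0) throw new Error("priority must be positive");
    // Cheap (few tokens) and important (high priority) requests get small tags.
    const finish = this.virtualTime + req.tokens / req.priority;
    this.items.push({ req, finish, seq: this.seq++ });
  }

  dequeue(): QueuedRequest | undefined {
    if (this.items.length === 0) return undefined;
    // Linear scan keeps the sketch short; a heap would be the usual choice.
    let best = 0;
    for (let i = 1; i < this.items.length; i++) {
      const a = this.items[i];
      const b = this.items[best];
      // Ties fall back to arrival order.
      if (a.finish < b.finish || (a.finish === b.finish && a.seq < b.seq)) {
        best = i;
      }
    }
    const [picked] = this.items.splice(best, 1);
    // Advancing virtual time keeps later arrivals from jumping old requests forever.
    this.virtualTime = Math.max(this.virtualTime, picked.finish);
    return picked.req;
  }
}

const queue = new FairQueue();
queue.enqueue({ id: "trial-review", tokens: 2000, priority: 100 });
queue.enqueue({ id: "paid-review", tokens: 2000, priority: 200 });
queue.enqueue({ id: "chat", tokens: 500, priority: 1000 });
```

Dequeuing from this queue returns the chat message first, then the paid review, then the trial review, even though the trial review arrived first. In a full setup, a request would only be dequeued once the token bucket has enough tokens to admit it.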

Client-side quota management policies for gpt-4

Reaping the benefits

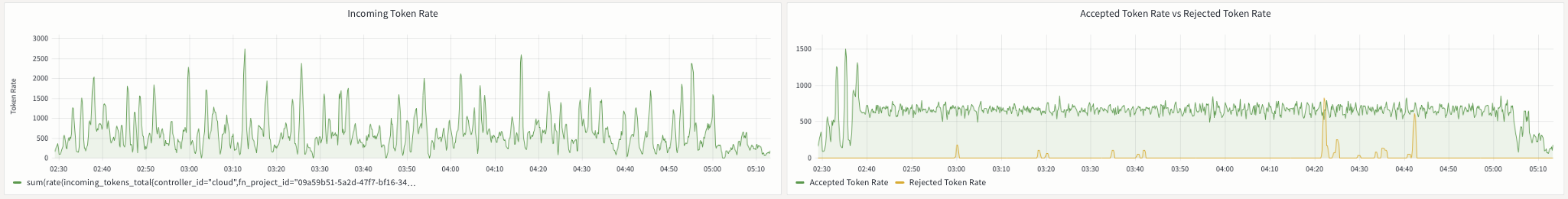

During peak hours, we typically process tens of pull requests, hundreds of files, and chat messages concurrently. The image below shows the incoming token rate and the accepted token rate for the gpt-4 tokens-per-minute policy. We can observe that the incoming token rate is spiky, while the accepted token rate remains smooth and hovers around 666 tokens per second. This roughly translates to 40,000 tokens per minute. Essentially, Aperture is smoothing out the fluctuating incoming token rate to align it with OpenAI's rate limits.

Incoming and Accepted Token Rate for gpt-4


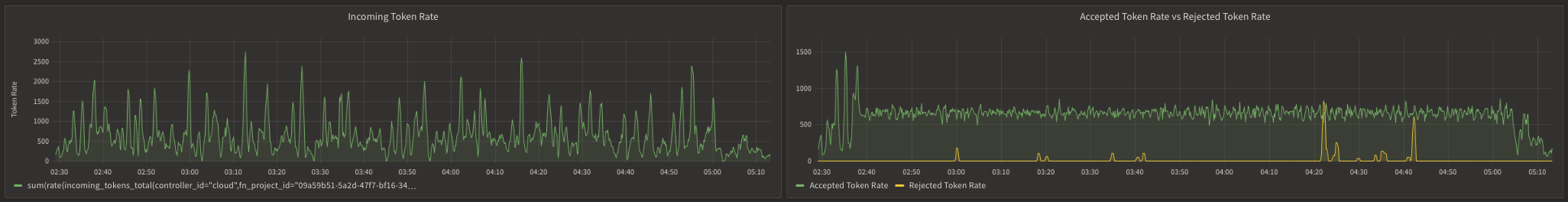

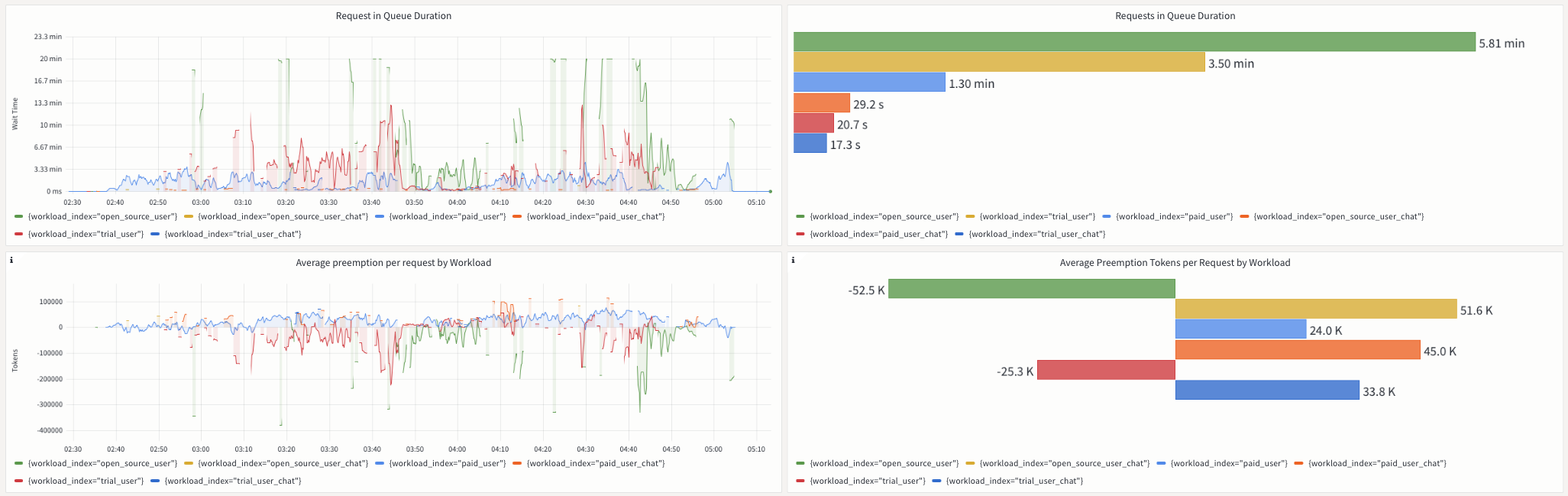

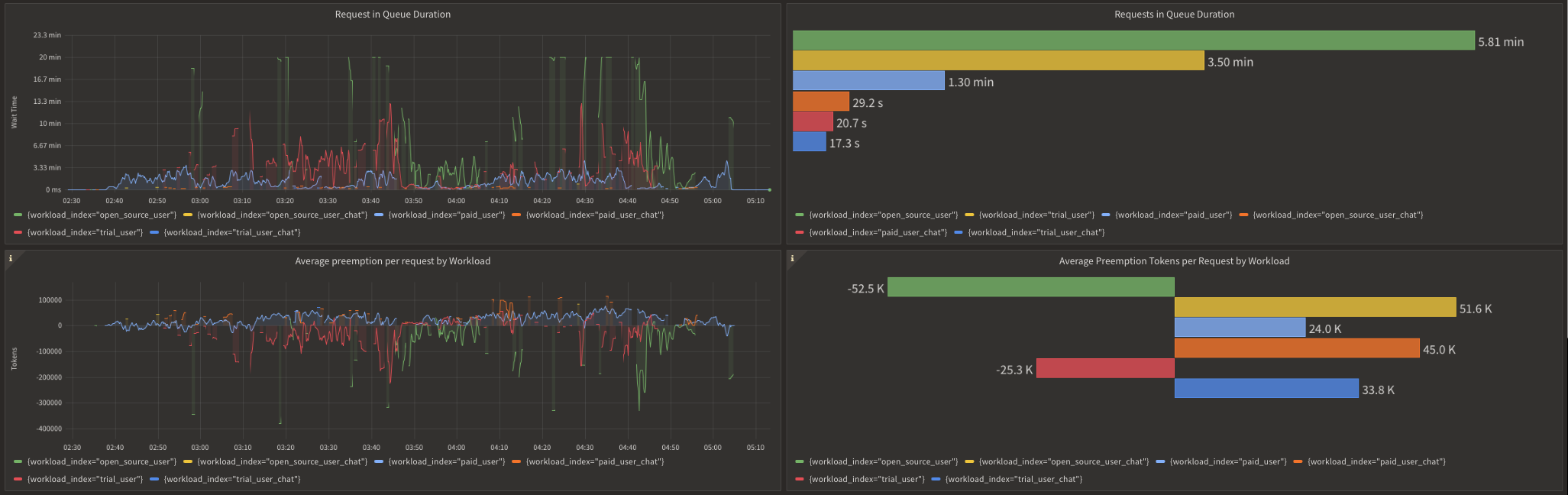

The image below shows request prioritization metrics from the Aperture Cloud console during the same peak load period:

Prioritization Metrics for gpt-4


In the upper left panel of the metrics, noticeable peaks indicate that some requests got queued for several minutes in Aperture. We can verify that the trial and free-tier users tend to experience longer queue times compared to their paid counterparts and chat requests.

Queue wait times can fluctuate based on the volume of simultaneous requests in each workload. For example, wait times are significantly longer during peak hours than during off-peak hours. Aperture provides scheduler preemption metrics to offer further insight into the efficacy of prioritization. As observed in the lower panels, these metrics measure the relative impact of prioritization for each workload by comparing, in tokens, how far a request is moved ahead (preempted) or delayed in the queue relative to a purely First-In, First-Out (FIFO) ordering.

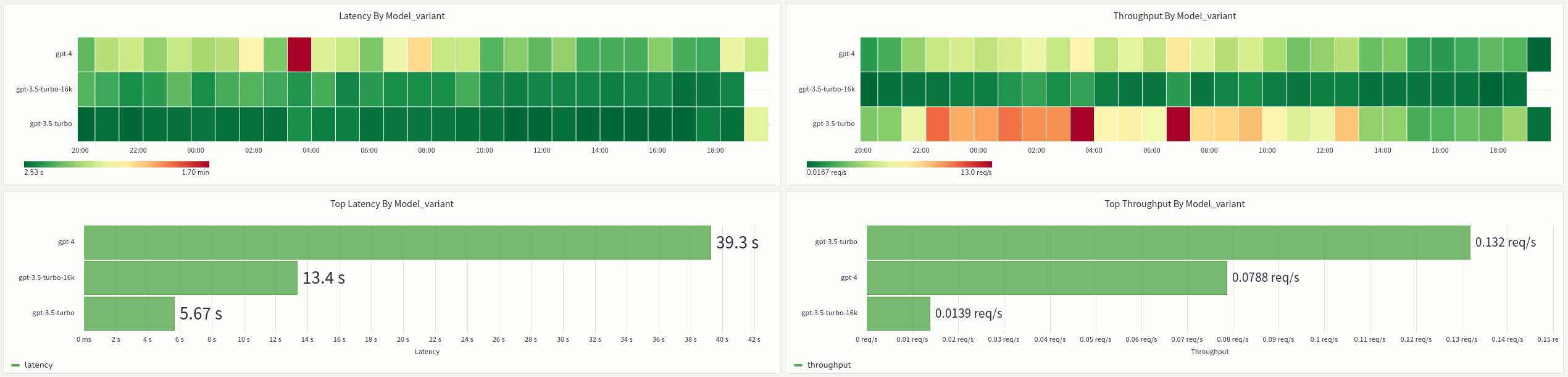

In addition to effectively managing the OpenAI quotas, Aperture provides insights into OpenAI API performance and errors. The graphs below show the overall response times for various OpenAI models we use. We observe that the gpt-4 family of models is significantly slower compared to the gpt-3.5-turbo family of models. This is quite insightful, as it hints at why OpenAI's infrastructure struggles to meet demand - these APIs are not just simple database or analytics queries; they are computationally expensive to run.

Performance Metrics for OpenAI Models


Conclusion

Aperture has been a game-changer for CodeRabbit. It has enabled us to continue signing up users and growing our usage without worrying about OpenAI rate limits. Without Aperture, our business would have hit a wall and resorted to a wait-list approach, which would have undermined our traction. Moreover, Aperture has provided us with valuable insights into OpenAI API performance and errors, helping us monitor and improve the overall user experience.

In the realm of generative AI, we are dealing with fundamentally different API dynamics. Performance-wise, these APIs are an order of magnitude slower than traditional APIs. We believe that request scheduling and prioritization will become a critical component of the AI infrastructure stack, and with Aperture, FluxNinja is well positioned to be the leader in this space. As CodeRabbit continues to add components such as vector databases, which are also computationally expensive, we are confident that Aperture will continue to help us offer a reliable experience to our users.