Service mesh technologies such as Istio have brought significant advances to the cloud native industry through load management and security features. Despite these advances, applications running on a service mesh still cannot prioritize critical traffic during peak load. As a result, real-world problems persist: back-end services get overloaded by excess load, which degrades the user experience. While it is nearly impossible to predict when a sudden increase in load will happen, it is possible to add an essential capability to service meshes for handling unexpected scenarios: observability-driven load management.

In this blog post, we discuss the benefits of Istio and how FluxNinja Aperture can make Istio a more robust service mesh that handles unexpected scenarios by leveraging advanced load management capabilities.

What are Istio's Base Capabilities?

Istio capabilities are centered around three pillars:

- Security: This includes basic encryption between services, mutual TLS between services, and basic access control. Together these make use cases such as micro-segmentation possible, ensuring that services talk to each other only as intended. Istio also handles authentication, authorization, and audit logging.

- Traffic Management: This encompasses fundamental load balancing algorithms, network settings like timeouts and retry configurations for each service subset, passive checks such as outlier detection and circuit breaking, as well as CI/CD-focused features like canary deployments and A/B testing.

- Observability: Istio includes detailed telemetry that combines metrics, distributed tracing, and logging. This combination is powerful, providing a holistic view of the system. It allows you to monitor how services are performing based on fine-grained metrics and golden signals, track the flow of traffic through access logs, and observe how services communicate with each other.

What does Istio need?

When it comes to service meshes and managing service-to-service communication, Istio is the go-to technology. However, gaps in handling unexpected scenarios, such as sudden traffic surges or a database bottlenecked by too many connections, make it fragile, especially when multiple factors are at play.

Rate Limiting & Resource Fairness: Rate limiting is often the first line of defense against API abuse. For example, if a user inundates your service with an excessive number of repeated requests in a short time frame, it becomes essential to put a stop to this behavior. Base Istio does not apply rate limits by default, which matters most for services that are shared among or accessed by various entities. Implementing rate limits ensures fairness and prevents any specific group of users from monopolizing resources. A rate limit acts as a protective layer that facilitates the fair distribution of resources and avoids unnecessary resource wastage.
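To make the fairness argument concrete, here is a minimal sketch of per-user rate limiting with token buckets. It is not Istio's or Aperture's implementation; the class names `TokenBucket` and `PerUserLimiter` and their parameters are assumptions made for illustration, and time is passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Token bucket that refills at `rate` tokens per second up to `capacity`."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: float = 0.0

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start with a full bucket

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class PerUserLimiter:
    """Keeps one bucket per user so a single user cannot starve the others."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.buckets = {}  # user id -> TokenBucket

    def allow(self, user: str, now: float) -> bool:
        if user not in self.buckets:
            self.buckets[user] = TokenBucket(self.rate, self.capacity)
        return self.buckets[user].allow(now)


if __name__ == "__main__":
    limiter = PerUserLimiter(rate=1.0, capacity=2.0)
    # User "a" bursts three requests at t=0; user "b" sends one.
    print([limiter.allow("a", 0.0) for _ in range(3)], limiter.allow("b", 0.0))
```

Because each user draws from a separate bucket, a burst from one user exhausts only that user's tokens, and other users are still admitted.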

Preventing Cascading Failures: Because services are susceptible to overload, it's crucial to implement mechanisms that prevent cascading failures. Traditional solutions simply block requests, but modern systems need to sustain high throughput even under intense stress, because businesses cannot afford complete service downtime. For example, when a database experiences a heavy load, the service should handle the load gracefully and prioritize requests based on business criticality, rather than dropping all requests or risking a database crash.

Managing Third-party Dependencies: In the era of API/Service as a Product, it is uncommon for an application to operate in isolation. It frequently relies on third-party systems, APIs, or managed services like DynamoDB or OpenAI. This dependence gives rise to various challenges, including the following:

Staying Within Limits: Just like any community, a service provider expects its consumers to adhere to certain norms. Overstepping API rate limits can lead to penalties. GitHub, for example, might penalize users who frequently exceed their allocated quota. Being a 'good digital citizen' therefore involves respecting these bounds.

Effective Quota Utilization: Every application has its priorities. Some requests hold more business value than others. When approaching these third-party limits, it becomes necessary to have a strategy in place that prioritizes essential requests. This not only ensures vital functions aren't hindered, but also maximizes the chances of critical requests being processed. For example, if you're using OpenAI to generate text, you might want to prioritize requests that are more time-sensitive, such as those that are part of a user-facing application. This ensures that the user experience isn't compromised.

If you're using the same API key across multiple workloads, you also need to ensure that their combined usage doesn't exceed the quota. This requires a well-defined strategy for when to retry, particularly when you're approaching the threshold, without any guesswork.

Request Prioritization: Not all requests are equal. Prioritizing high-importance requests based on time sensitivity and business criticality is one of the core challenges in distributed systems.

Flow Control in Istio with Aperture

Aperture is an open-source platform designed explicitly for observability-driven flow control. It integrates with your existing stack, whether that is a service mesh, an API gateway, or language SDKs, and is programmable through a declarative policy language expressed as a control circuit graph.

Here's what Aperture brings to Istio:

- Distributed Rate Limiting: This differs from what Istio offers on its own. We can think of it as per-service rate limiting for Istio, implemented through the Istio Authz API, which lets you limit users in a distributed and scalable way.

- Adaptive Limits & Request Prioritization: This capability builds on circuit-based policies, allowing you to use any signal from your infrastructure that measures a bottleneck, such as queue size, thread count, latency, or anything else that indicates overload buildup. Any indication of system overload then triggers immediate adaptive rate limiting as close to the entry point as possible, which reduces wasted work.

- Quota Limits & Request Prioritization: This feature helps when working with a third-party service or even among different teams. Teams or third parties often set quotas (expressed in RPS or QPS) so that no single team or API consumer places an excessive burden on a particular service. These quota limits can apply between services within a larger organization or to third-party services such as DynamoDB, GitHub, or OpenAI. Additionally, having a set quota limit adds another layer of control. Traditionally, achieving optimal quota utilization meant guessing when to retry requests as you approached the limit.

- Telemetry: Unlike the basic telemetry offered by Istio, Aperture's telemetry is more surgical because it is tied to Rego-based classification rules. You can go deep into the request payload to extract fine-grained traffic labels and get latency histograms, which allow percentile-based evaluations.

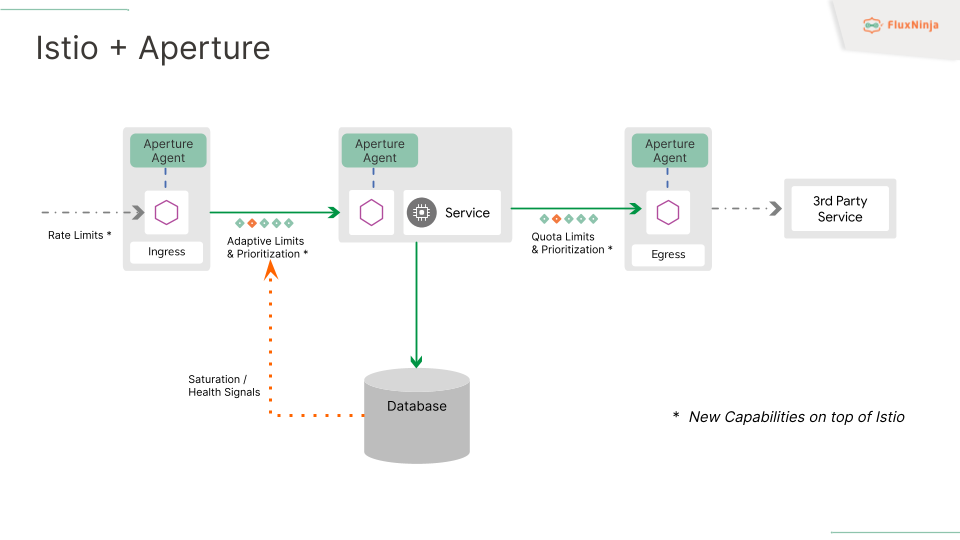

How do these capabilities fit in Istio?

The Aperture Agent sits next to the Envoy proxy and acts like an additional sidecar. It integrates via Envoy's Authorization API, which lets it intercept and manage incoming calls. Aperture can be installed at various vantage points where the Envoy proxy (the Istio proxy) is installed:

- At the ingress point, the Aperture Agent can implement features like rate limiting for incoming calls. Any call that enters Envoy gets forwarded to the Aperture Agent, which then applies the rate limits.

- Within services, the Aperture Agent offers more advanced features, like adaptive rate limits & prioritization. These are based on saturation signals taken from bottleneck services, typically databases in a distributed application. By doing this, the Aperture Agent can dynamically control the traffic rate entering your service and prioritize it accordingly.

- At the egress point, the Aperture Agent handles external service calls, for example, calls going to a third party like OpenAI. The Agent helps you model these external rate limits internally using token buckets. This enables you to stay within those external limits and prioritize high-importance requests to use the available quota more effectively.
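The egress case can be sketched as a token bucket that mirrors the provider's limit, with waiting requests served in priority order. This is only an illustration of the idea under simplifying assumptions (one token per request, explicit timestamps); `QuotaScheduler`, `submit`, and `release` are hypothetical names, not Aperture APIs.

```python
import heapq
import itertools


class QuotaScheduler:
    """Models an external quota as a token bucket and admits waiting
    requests highest-priority first as tokens become available."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate      # tokens added per second (the provider's limit)
        self.burst = burst    # maximum tokens that can accumulate
        self.tokens = burst
        self.last = 0.0
        self._queue = []      # min-heap of (-priority, arrival order, request id)
        self._seq = itertools.count()

    def submit(self, request_id: str, priority: float) -> None:
        # Negating the priority makes the heap pop the highest priority first;
        # the sequence number keeps arrival order within a priority level.
        heapq.heappush(self._queue, (-priority, next(self._seq), request_id))

    def release(self, now: float) -> list:
        """Refill the bucket, then admit as many queued requests as tokens allow."""
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        admitted = []
        while self._queue and self.tokens >= 1.0:
            _, _, request_id = heapq.heappop(self._queue)
            self.tokens -= 1.0
            admitted.append(request_id)
        return admitted


if __name__ == "__main__":
    scheduler = QuotaScheduler(rate=1.0, burst=2.0)
    scheduler.submit("batch-1", priority=50)
    scheduler.submit("chat-1", priority=250)
    scheduler.submit("batch-2", priority=50)
    scheduler.submit("chat-2", priority=250)
    print(scheduler.release(0.0))  # user-facing requests use the quota first
    print(scheduler.release(1.0))
```

Requests that do not fit in the current quota stay queued rather than being sent and rejected by the provider, which removes the retry guesswork described earlier.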

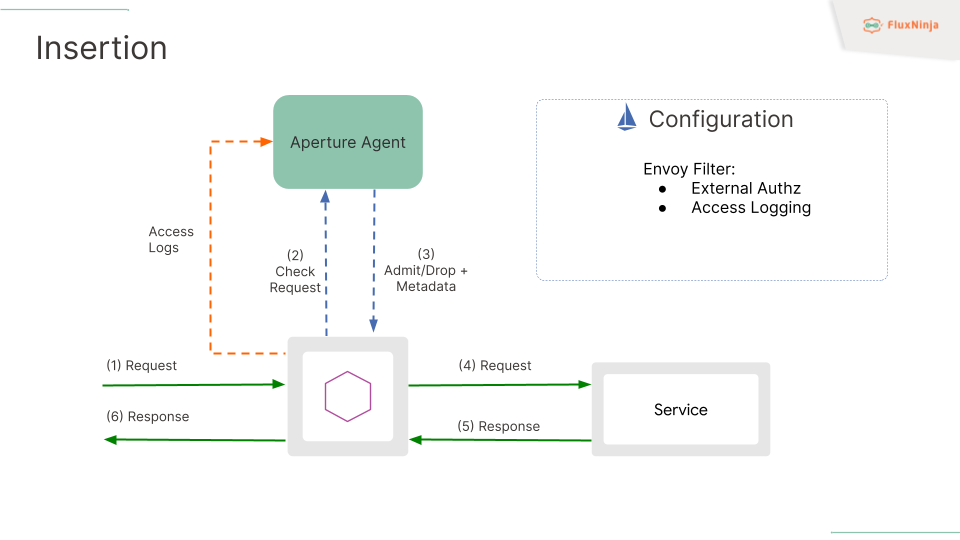

Aperture Insertion in Istio

The insertion is done using the EnvoyFilter CRD, which defines the External Authz and access logging interceptors and tells Envoy where the Aperture Agent is running. It also controls which filter applies where, limiting the scope to specific workloads through workload selectors.

The Authz API, which was implemented by the OPA team for Authz use cases, is used by Aperture for flow control. Any request that comes to the Envoy proxy gets forwarded to the Agent for a yes-or-no answer, either admit or drop, and the Agent can add metadata to the response it returns. In the case of a no, the Envoy proxy won’t forward the request to the service and instead responds with a 503 status code or the status code configured in the Aperture policy. The default is 503; for rate limiting, it is 403.
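The yes-or-no exchange described above can be sketched in a few lines. This is a toy model of the control flow only, not the Envoy or Aperture API: `Decision`, `check`, and `proxy_handle` are hypothetical names, and the admission rule is passed in as a plain function.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Decision:
    allowed: bool
    status_code: int  # status the proxy returns to the caller
    metadata: dict = field(default_factory=dict)


def check(headers: dict, admit: Callable[[dict], bool],
          reject_status: int = 503) -> Decision:
    """Agent side: answer the proxy's yes/no question for one request."""
    if admit(headers):
        return Decision(True, 200, {"decision": "accepted"})
    return Decision(False, reject_status, {"decision": "rejected"})


def proxy_handle(headers: dict, admit: Callable[[dict], bool],
                 upstream: Callable[[dict], str], reject_status: int = 503):
    """Proxy side: forward to the service only when the agent says yes."""
    decision = check(headers, admit, reject_status)
    if not decision.allowed:
        return decision.status_code, ""  # the service is never called
    return 200, upstream(headers)


if __name__ == "__main__":
    service = lambda headers: "hello"
    only_subscribers = lambda headers: headers.get("user-type") == "subscriber"
    print(proxy_handle({"user-type": "guest"}, only_subscribers, service))
    print(proxy_handle({"user-type": "subscriber"}, only_subscribers, service))
```

The point of the design is that a rejected request costs the service nothing: the decision is made before any work reaches it.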

The other integration with Envoy is access logs, which Aperture uses for telemetry. This telemetry helps Aperture estimate tokens dynamically and completes its feedback loop.

Aperture in Action

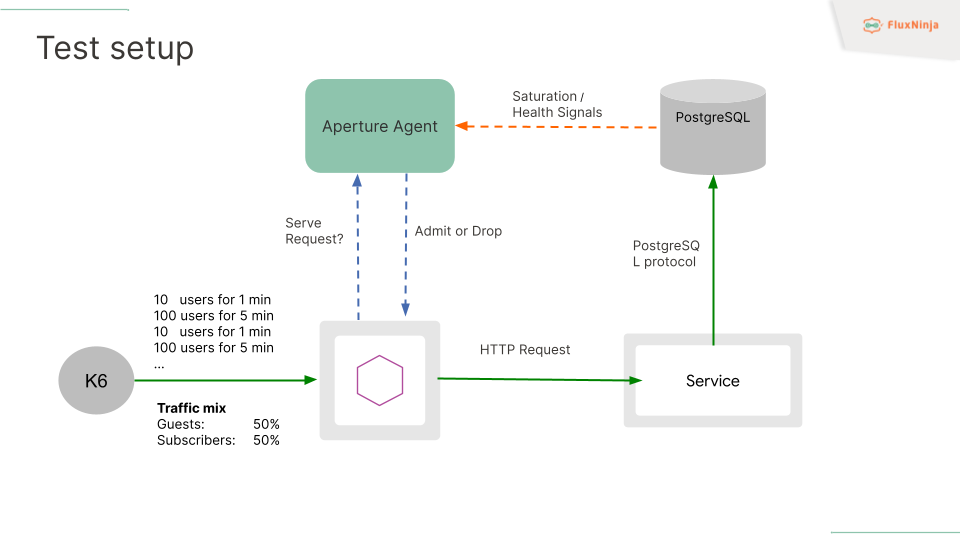

The playground setup includes a PostgreSQL database and a specialized HTTP service. Whenever an incoming HTTP request is detected, this service forwards a query to the PostgreSQL database. The entire system is interconnected through Istio, which simplifies complex communications between services & applications.

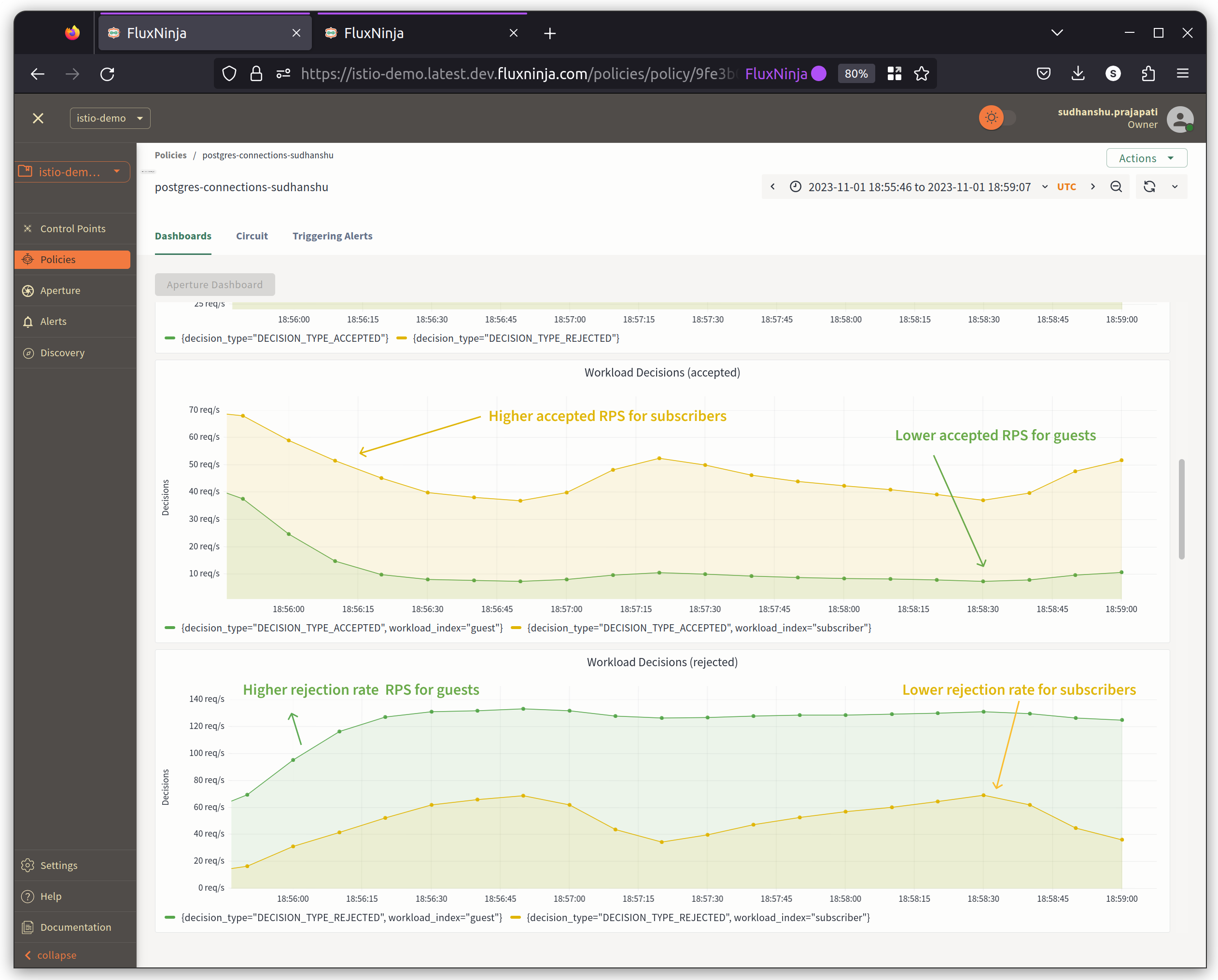

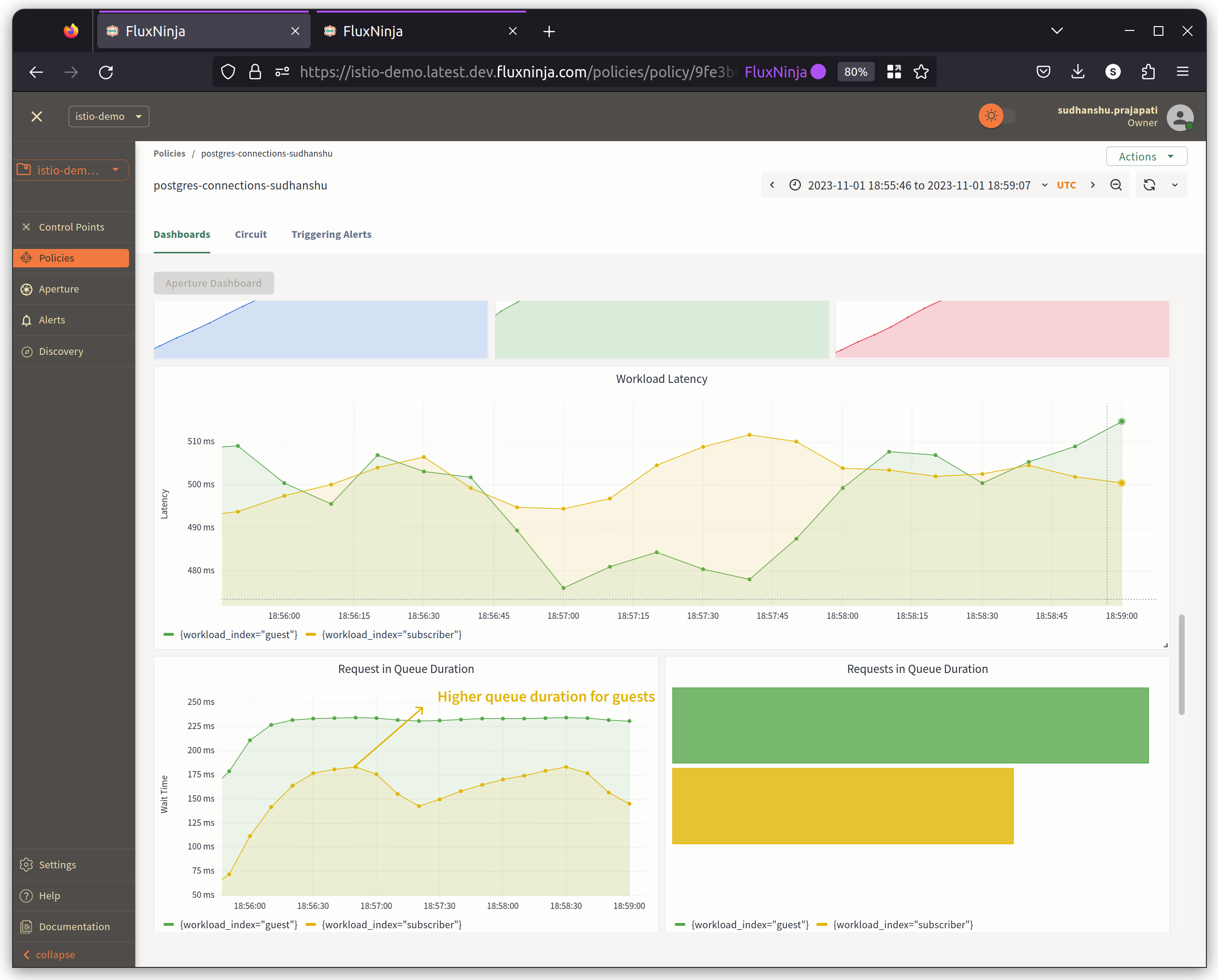

We're also using a project based on k6, which is designed to simulate user behavior and traffic. k6 sends requests to our HTTP service, and these requests pass through the Envoy proxy. The users in our tests are categorized into two types: guests and subscribers. Subscribers are given higher priority, and the traffic is split evenly between the two at 50% each.

In terms of traffic patterns, the test emulates both sine and square waveforms. It starts with a load of 10 users for the first minute and escalates to 100 users thereafter. When the user count reaches 100, our service makes numerous API calls to PostgreSQL, which rapidly fills PostgreSQL's queue.

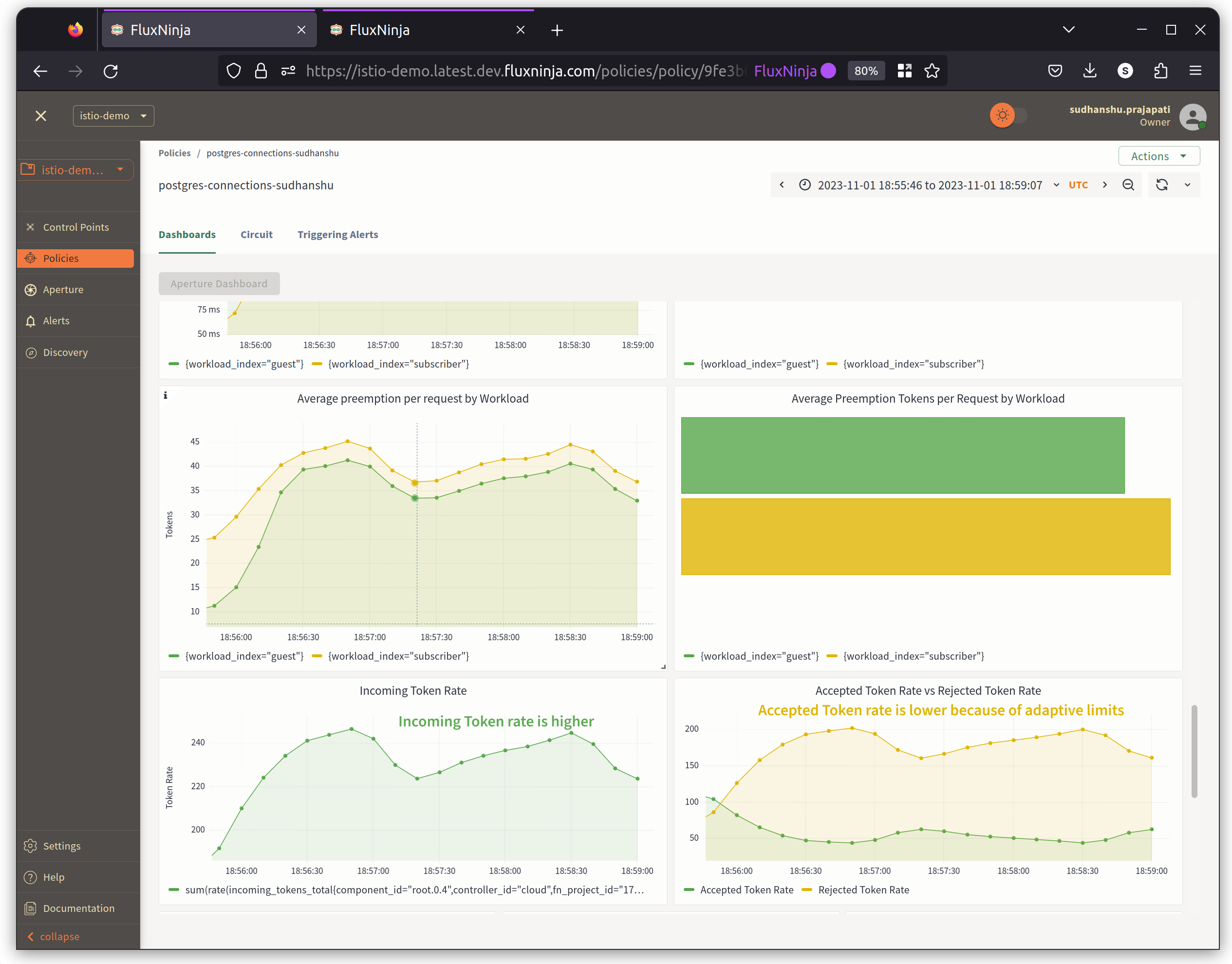

To manage this, we monitor the number of active connections to PostgreSQL. If connection usage approaches 100% of the limit, it indicates that a queue is building up, resulting in latency spikes that affect all users. To mitigate this, we have implemented monitoring through an Aperture Agent.

This Agent is equipped with an OpenTelemetry (OTel) pipeline that scrapes metrics from PostgreSQL. These metrics feed into an algorithm designed for dynamic throttling of incoming API requests. This way, we can maintain service quality by ensuring that PostgreSQL's connection load stays manageable and prioritizing subscriber traffic.

Policy

In the policy, we define an OTel collector configuration for scraping PostgreSQL metrics in the postgresql section. We treat the database as overloaded when more than 40% of PostgreSQL connections are in use. When that happens, we decrease the load multiplier by 20% every 10 seconds. During recovery, we increase the load multiplier by 5% every 10 seconds. Priorities are defined for both user types based on the value of the user-type header.

    blueprint: load-scheduling/postgresql
    policy:
      policy_name: postgres-connections
      postgresql:
        agent_group: default
        endpoint: postgresql.postgresql.svc.cluster.local:5432
        username: postgres
        password: secretpassword
        databases:
          - "postgres"
        collection_interval: 10s
        tls:
          insecure: true
      service_protection_core:
        setpoint: 40
        aiad_load_scheduler:
          load_multiplier_linear_decrement: 0.2
          load_multiplier_linear_increment: 0.05
          overload_condition: gt
          load_scheduler:
            selectors:
              - agent_group: default
                control_point: ingress
                service: service1-demo-app.demoapp.svc.cluster.local
            scheduler:
              workloads:
                - label_matcher:
                    match_labels:
                      http.request.header.user_type: "guest"
                  parameters:
                    priority: 50.0
                  name: "guest"
                - label_matcher:
                    match_labels:
                      http.request.header.user_type: "subscriber"
                  parameters:
                    priority: 250.0
                  name: "subscriber"
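The control behavior this policy describes can be approximated with a small simulation. The sketch below reads "linear decrement" and "linear increment" as additive steps applied once per 10-second tick, and it assumes the multiplier is clamped between 0.0 and 1.0; those bounds and the function names `aiad_step` and `simulate` are assumptions for illustration, not values taken from the blueprint.

```python
def aiad_step(multiplier: float, signal: float, setpoint: float = 40.0,
              decrement: float = 0.2, increment: float = 0.05,
              min_multiplier: float = 0.0, max_multiplier: float = 1.0) -> float:
    """One control tick (the policy text describes a tick every 10 seconds)."""
    if signal > setpoint:  # mirrors overload_condition: gt
        return max(min_multiplier, multiplier - decrement)
    return min(max_multiplier, multiplier + increment)


def simulate(signals, start: float = 1.0) -> list:
    """Apply aiad_step over a series of connection-usage readings (percent)."""
    multiplier, history = start, []
    for signal in signals:
        multiplier = aiad_step(multiplier, signal)
        history.append(round(multiplier, 2))  # rounded for display only
    return history


if __name__ == "__main__":
    # Connection usage in percent, sampled once per tick.
    print(simulate([30, 55, 60, 35, 35]))
```

The asymmetry is deliberate: the multiplier drops quickly when usage crosses the setpoint and recovers slowly, which keeps the database from being pushed straight back into overload.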

Aperture Policy in Action

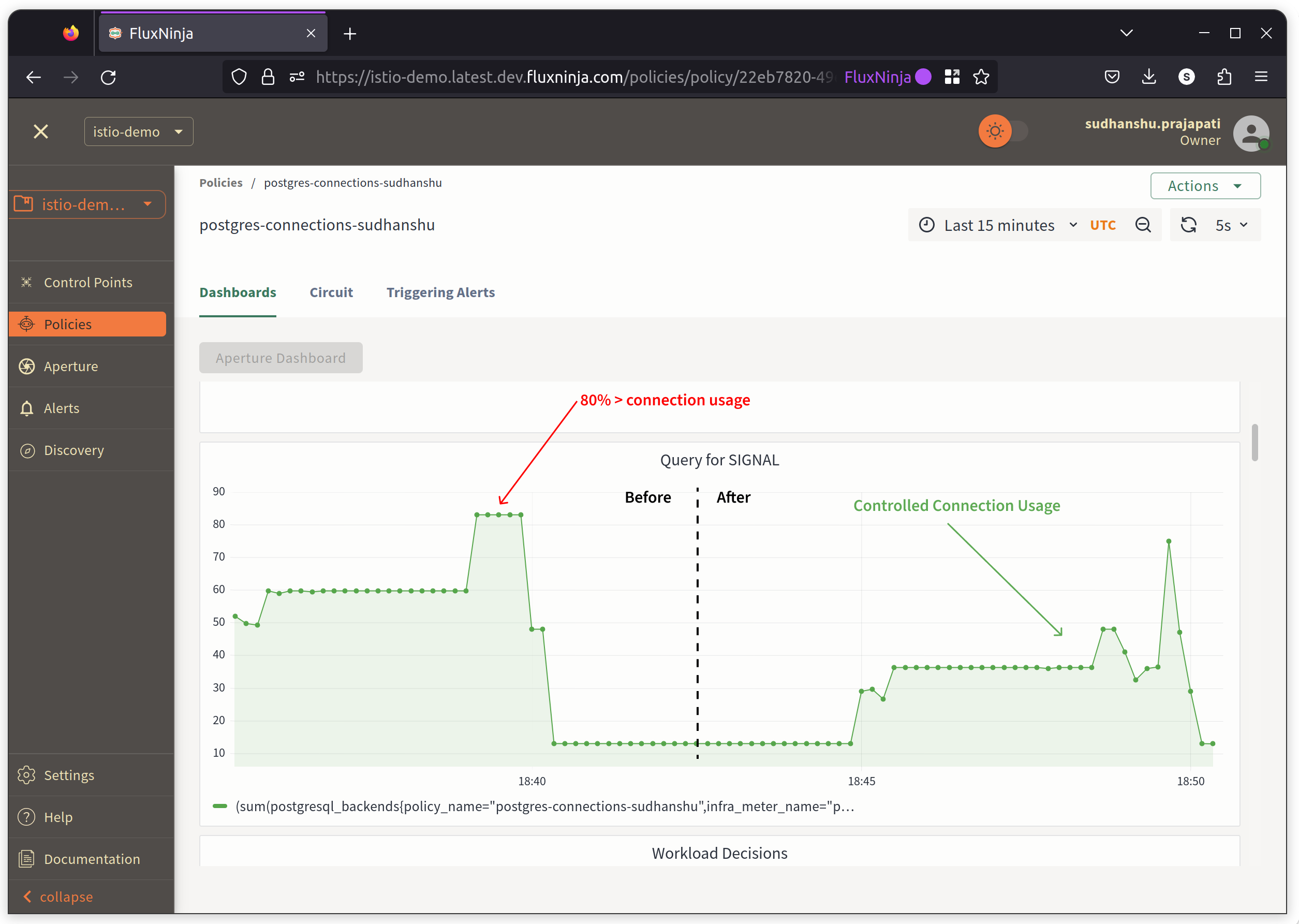

In the graph above, we can observe how the policy is functioning. Before the policy takes action, the PostgreSQL client reports a "too many clients" error as the connection count approaches 100, which implies a queue buildup on the PostgreSQL side. Once the policy comes into effect, it keeps connection usage hovering around the defined limit of 40%.

Also, the accepted workload RPS for subscribers is almost 5x higher than for guests.

Requests are scheduled and wait in a queue for some time if necessary.
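The roughly 5x ratio lines up with the priorities in the policy (250 for subscribers, 50 for guests). One way to see why is a small stride-scheduling sketch, shown below. It is an approximation for intuition, assuming both workloads always have requests waiting; it is not Aperture's scheduler, and `weighted_share` is a hypothetical helper.

```python
from collections import Counter
from fractions import Fraction


def weighted_share(priorities: dict, slots: int) -> Counter:
    """Stride scheduling: each admission advances a workload's virtual time
    by 1/priority, and the workload with the smallest virtual time goes next."""
    vtime = {name: Fraction(0) for name in priorities}
    admitted = Counter()
    for _ in range(slots):
        # Smallest virtual time wins; ties go to the higher priority.
        name = min(vtime, key=lambda n: (vtime[n], -priorities[n]))
        admitted[name] += 1
        vtime[name] += 1 / Fraction(priorities[name])
    return admitted


if __name__ == "__main__":
    shares = weighted_share({"guest": 50.0, "subscriber": 250.0}, slots=60)
    print(shares["subscriber"], shares["guest"])
```

With 60 admission slots, this split gives subscribers 50 slots and guests 10, a 5:1 ratio. Real traffic will deviate from that whenever one workload has no requests waiting.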

Conclusion

While Istio provides a solid service mesh foundation, it lacks sophisticated flow control capabilities. This leaves gaps around preventing cascading failures, managing third-party dependencies, and prioritizing critical requests with a more proactive approach.

FluxNinja Aperture fills these gaps with its observability-driven approach to flow control. It integrates seamlessly with Istio, enabling advanced traffic management like adaptive rate limiting, advanced throttling, quota management, and request prioritization.

Together, Istio and Aperture give enterprises the control they need to build resilient, scalable production systems.

Try out Aperture Cloud today with your own Istio mesh and experience observability-driven flow control capabilities.