In today's world, the internet is the most widely used technology. Everyone, from individuals to products, seeks to establish a strong online presence. This has led to a significant increase in users accessing various online services, resulting in a surge of traffic to websites and web applications.

Because of this surge in user traffic, companies now prioritize estimating the number of potential users when launching new products or websites, since capacity constraints can lead to website downtime. For example, after the announcement of ChatGPT 3.5, there was a massive influx of traffic and interest from people all around the world. In such situations, it is essential to have load management in place to avoid possible business loss.

As businesses grow and expand, it becomes increasingly important to ensure that their web applications and websites can handle the influx of traffic and demand without sacrificing performance or reliability. Even big organizations are still suffering from these downtime issues, which highlights the need for an intelligent load management platform.

That's where FluxNinja Aperture comes in. In this blog post, we'll explore how Aperture can help manage high traffic on e-commerce websites by integrating with Nginx Gateway. With Aperture's advanced load management techniques, such as adaptive service protection, practitioners can ensure the reliability and stability of their web applications, even during periods of high traffic.

The Challenge: Managing High-Traffic E-commerce Website with Nginx

- Unpredictable traffic spikes: During holidays or special events, traffic spikes can put a strain on the website leading to slow response times, server crashes, or downtime.

- Limited resources: Often, websites only have limited resources to handle traffic spikes which can lead to a lack of scalability and can make it challenging to provide a consistent user experience.

- Dynamic traffic patterns: Throughout the year, websites are affected by unpredictable traffic patterns. Managing this dynamic traffic can be challenging, especially during peak periods.

- Performance and reliability: Maintaining a high level of performance and reliability is key to providing an optimal user experience. Slow response times, errors, or downtime can lead to lost revenue and damage to the brand's reputation.

If these challenges are not managed effectively, they can lead to significant consequences such as downtime, lost revenue, and a negative impact on the brand's reputation.

To overcome these challenges effectively, let's examine a concrete setup that can be implemented.

Solving the Load Management Challenge

Now, let's explore how FluxNinja Aperture resolves the challenges listed above.

FluxNinja Aperture is a load management platform that integrates with Nginx Gateway to provide advanced load management techniques for the challenges associated with high and unpredictable traffic. Aperture helps solve load management challenges with the following features:

- Service Load Management: Dynamically adjusts the number of requests that can be made to a service based on its health. It can also prioritize requests based on their importance, ensuring that critical requests are processed first.

- API Quota Management: Optimizes external API requests by managing quotas across multiple services preventing overages.

- Rate Limiting: Prevents abuse and protects the service from excessive requests.

Let's explore the steps involved in configuring one of these strategies with Nginx Gateway and Aperture.

Integrating Aperture with Nginx Gateway

Before jumping into the integration of Aperture with Nginx, we assume that Aperture is already installed. If not, please visit Aperture installation and then get started with the Flow Control Gateway Integration.

As an overview of the Nginx server requirements: lua-nginx-module has to be enabled, and LuaRocks has to be configured. If you run into blockers, check the documentation mentioned above.

To integrate Aperture with Nginx Gateway, these are the high-level steps that need to be performed:

Install the Aperture Lua module: The opentelemetry-lua SDK has to be installed before the Aperture Lua module can be installed. Visit the Nginx Integration doc for detailed steps.

Configure Nginx: This involves adding certain blocks of code to the Nginx configuration file to initialize and execute the Aperture Lua module. Below is an example of these blocks:

    http {
      ...
      init_by_lua_block {
        access = require "aperture-plugin.access"
        log = require "aperture-plugin.log"
      }

      access_by_lua_block {
        local authorized_status = access(ngx.var.destination_hostname, ngx.var.destination_port)

        if authorized_status ~= ngx.HTTP_OK then
          return ngx.exit(authorized_status)
        end
      }

      log_by_lua_block {
        log()
      }

      server {
        listen 80;
        proxy_http_version 1.1;

        location /service1 {
          set $destination_hostname "service1-demo-app.demoapp.svc.cluster.local";
          set $destination_port "80";
          proxy_pass http://$destination_hostname:$destination_port/request;
        }
        ...
      }
      ...
    }

- init_by_lua_block initializes the module.

- access_by_lua_block executes the Aperture check for all servers and locations before the request is forwarded upstream.

- log_by_lua_block forwards the OpenTelemetry logs to Aperture for all servers and locations after the response is received from upstream.

Additionally, the Aperture Lua module needs the upstream address of the server, provided through the destination_hostname and destination_port variables, which need to be set from the Nginx location block.

Set environment variables:

- APERTURE_AGENT_ENDPOINT: To connect to the Aperture Agent.

- APERTURE_CHECK_TIMEOUT: To specify the timeout for execution of the Aperture check.
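
Since the module depends on both environment variables, a small preflight check can catch a missing one before Nginx is started. The following is a minimal sketch in Python, not part of Aperture: the helper name missing_aperture_vars is hypothetical, and it only checks that the variables are non-empty because the expected value formats are not described here.

```python
import os

# Variable names come from the integration steps above.
# The descriptions are paraphrased; the expected value formats are not assumed.
REQUIRED_VARS = {
    "APERTURE_AGENT_ENDPOINT": "address used to connect to the Aperture Agent",
    "APERTURE_CHECK_TIMEOUT": "timeout for execution of the Aperture check",
}


def missing_aperture_vars(env=None):
    """Return the required variable names that are unset or blank, sorted by name."""
    env = os.environ if env is None else env
    return sorted(
        name for name in REQUIRED_VARS if not str(env.get(name, "")).strip()
    )


# Report anything missing in the current process environment.
for name in missing_aperture_vars():
    print(f"missing {name}: {REQUIRED_VARS[name]}")
```

Calling missing_aperture_vars() with no argument inspects the current process environment; passing a dictionary makes the check easy to test in isolation.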

Demo

In this demonstration, we will explore how rate-limiting escalation can assist an e-commerce website during unexpected high traffic. E-commerce websites typically have three types of users: crawlers, guests, and subscribed members. During periods of high traffic, the website may struggle to respond to each request without prioritization, leading to frustration among paying users.

To demonstrate this scenario, we have set up a playground environment with an Nginx server forming a topology, as depicted below. To see it in action, you can run the playground on your local machine by following the instructions in the Try Local Playground guide.

This playground is a Kubernetes-based environment that includes the necessary components, such as the Aperture Controller and Agent, already installed in the cluster.

Traffic Generator

The playground also has a load generator named wavepool-generator, which will help us mimic the high-traffic scenario for our use case.

👉 For your information, the load generator is configured to generate the following traffic pattern for subscriber, guest and crawler traffic types:

- Ramp up to 5 concurrent users in 10s.

- Hold at 5 concurrent users for 2m.

- Ramp up to 30 concurrent users in 1m (overloads service3).

- Hold at 30 concurrent users for 2m (overloads service3).

- Ramp down to 5 concurrent users in 10s.

- Hold at 5 concurrent users for 2m.
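
To make the shape of this traffic pattern concrete, here is a minimal Python sketch that computes the number of concurrent users at a given time. It is an illustration only and not the load generator's code: it assumes the test starts from zero users and that ramps are linear, and the names STAGES and users_at are hypothetical.

```python
# (target_users, duration_seconds) for each stage of the pattern above.
# A "hold" is simply a stage whose target equals the previous target.
STAGES = [
    (5, 10),    # ramp up to 5 users in 10s
    (5, 120),   # hold at 5 users for 2m
    (30, 60),   # ramp up to 30 users in 1m
    (30, 120),  # hold at 30 users for 2m
    (5, 10),    # ramp down to 5 users in 10s
    (5, 120),   # hold at 5 users for 2m
]


def users_at(t, stages=STAGES, start_users=0.0):
    """Concurrent users at time t (seconds), assuming linear ramps from start_users."""
    current = start_users
    elapsed = 0.0
    for target, duration in stages:
        if t <= elapsed + duration:
            fraction = (t - elapsed) / duration
            return current + (target - current) * fraction
        current = target
        elapsed += duration
    return current  # after the last stage, stay at the final level


total_seconds = sum(duration for _, duration in STAGES)
print(total_seconds, users_at(160))
```

Under these assumptions one cycle lasts 440 seconds, and the overload phase (the ramp to 30 users plus the hold) covers the window from 130s to 310s.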

Nginx Configuration

I’ve configured Nginx in the demo by assigning a URL to each service and defining their respective locations. The Nginx deployment and configuration file can be found in the Aperture repo Playground Resources.

Below is a snippet of the Nginx configuration file, which includes the definition of worker processes, events, and the HTTP server. The server block defines the listening port and the proxy_pass directive, which is used to pass the requests to the respective services.

    worker_processes auto;
    pid /run/nginx.pid;

    events {
      worker_connections 4096;
    }

    http {
      default_type application/octet-stream;
      resolver 10.96.0.10;

      sendfile on;
      keepalive_timeout 65;

      init_by_lua_block {
        access = require "aperture-plugin.access"
        log = require "aperture-plugin.log"
      }

      access_by_lua_block {
        local authorized_status = access(ngx.var.destination_hostname, ngx.var.destination_port)

        if authorized_status ~= ngx.HTTP_OK then
          return ngx.exit(authorized_status)
        end
      }

      log_by_lua_block {
        log()
      }

      server {
        listen 80;
        proxy_http_version 1.1;

        location /service1 {
          set $destination_hostname "service1-demo-app.demoapp.svc.cluster.local";
          set $destination_port "80";
          proxy_pass http://$destination_hostname:$destination_port/request;
        }

        location /service2 {
          set $destination_hostname "service2-demo-app.demoapp.svc.cluster.local";
          set $destination_port "80";
          proxy_pass http://$destination_hostname:$destination_port/request;
        }

        location /service3 {
          set $destination_hostname "service3-demo-app.demoapp.svc.cluster.local";
          set $destination_port "80";
          proxy_pass http://$destination_hostname:$destination_port/request;
        }
      }
    }

Aperture Policy

Aperture uses declarative policies that let you customize how your system should react in a given situation. In this policy, we have used rate limiter and concurrency controller components.

You don’t need to worry about wiring stuff from scratch. Aperture follows a blueprint pattern, where you can build upon existing blueprints. For example, in this policy, we’re using the Load Scheduling with Average Latency Feedback Blueprint and configuring it to our needs.

Before delving into the explanation, follow the installation process for seamless generation of the policy described below. This policy contains the following configurations:

- flux_meter: This configuration specifies the Flux Meter that will be used to measure the flow of traffic for the service. It uses a flow selector that matches traffic for a specific service and control point.

- classifiers: This configuration specifies a list of classification rules that will be used to classify traffic. It includes a flow selector that matches traffic for a specific service and control point, and a rule that extracts a user_type value from the request headers.

- components: This configuration specifies a list of additional circuit components that will be used for this policy. It includes:

  - decider: This component sets the IS_CRAWLER_ESCALATION signal to true once the desired load multiplier has stayed below 1.0 for 30 seconds. This signal determines whether crawler traffic should be throttled further.

  - switcher: This component switches between two signals based on the value of the IS_CRAWLER_ESCALATION signal that was set by the decider component. If the signal is true, the output signal is set to 0.0. If the signal is false, the output signal is set to 10.0.

  - flow_control: This component applies rate limiting to traffic that matches a specific label. It uses a flow selector to match traffic for a specific service and control point based on the label http.request.header.user_type with value crawler.

- load_scheduler: This configuration specifies the flow selector and scheduler parameters used to throttle request rates dynamically during high load. It uses the flow selector from the classifiers configuration and includes a scheduler that prioritizes traffic based on the user_type label or the http.request.header.user_type header value. It also includes a load multiplier linear increment that is applied when the system is not in an overloaded state.

    # yaml-language-server: $schema=../../../../blueprints/load-scheduling/average-latency/gen/definitions.json
    # Generated values file for load-scheduling/average-latency blueprint
    # Documentation/Reference for objects and parameters can be found at:
    # https://docs.fluxninja.com/reference/blueprints/load-scheduling/average-latency

    policy:
      # Name of the policy.
      # Type: string
      # Required: True
      policy_name: service1-demo-app
      # List of additional circuit components.
      # Type: []aperture.spec.v1.Component
      components:
        - decider:
            in_ports:
              lhs:
                signal_name: DESIRED_LOAD_MULTIPLIER
              rhs:
                constant_signal:
                  value: 1.0
            out_ports:
              output:
                signal_name: IS_CRAWLER_ESCALATION
            operator: lt
            true_for: 30s
        - switcher:
            in_ports:
              switch:
                signal_name: IS_CRAWLER_ESCALATION
              on_signal:
                constant_signal:
                  value: 0.0
              off_signal:
                constant_signal:
                  value: 10.0
            out_ports:
              output:
                signal_name: RATE_LIMIT
        - flow_control:
            rate_limiter:
              selectors:
                - control_point: service1-demo-app
                  label_matcher:
                    match_labels:
                      "http.request.header.user_type": "crawler"
              in_ports:
                bucket_capacity:
                  signal_name: RATE_LIMIT
                fill_amount:
                  signal_name: RATE_LIMIT
              parameters:
                label_key: http.request.header.user_id
                interval: 1s
      # Additional resources.
      # Type: aperture.spec.v1.Resources
      resources:
        flow_control:
          classifiers:
            - selectors:
                - control_point: service1-demo-app
                  service: nginx-server.demoapp.svc.cluster.local
                  label_matcher:
                    match_labels:
                      http.target: "/service1"
              rules:
                user_type:
                  extractor:
                    from: request.http.headers.user-type
      service_protection_core:
        adaptive_load_scheduler:
          load_scheduler:
            # The selectors determine the flows that are protected by this policy.
            # Type: []aperture.spec.v1.Selector
            # Required: True
            selectors:
              - control_point: service1-demo-app
                service: nginx-server.demoapp.svc.cluster.local
                label_matcher:
                  match_labels:
                    http.target: "/service1"
            # Scheduler parameters.
            # Type: aperture.spec.v1.SchedulerParameters
            scheduler:
              workloads:
                - parameters:
                    priority: 50.0
                  label_matcher:
                    match_labels:
                      user_type: guest
                  name: guest
                - parameters:
                    priority: 200.0
                  label_matcher:
                    match_labels:
                      http.request.header.user_type: subscriber
                  name: subscriber
          # Linear increment to load multiplier in each execution tick (0.5s) when the system is not in overloaded state.
          # Type: float64
          load_multiplier_linear_increment: 0.025
      latency_baseliner:
        latency_tolerance_multiplier: 1.1
        # Flux Meter defines the scope of latency measurements.
        # Type: aperture.spec.v1.FluxMeter
        # Required: True
        flux_meter:
          selectors:
            - control_point: service3-demo-app
              service: nginx-server.demoapp.svc.cluster.local
              label_matcher:
                match_labels:
                  http.target: "/service3"
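
The escalation chain in this policy (decider, then switcher, then rate limiter) can be easier to follow as a small simulation. The sketch below is a simplified Python model and not Aperture's implementation. It assumes that true_for means the condition must hold continuously for that duration before the output becomes true, that signals are evaluated once per 0.5s tick, and that the rate limiter behaves like a per-key token bucket. The class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Decider:
    """True once `lhs < rhs` has held continuously for `true_for` seconds (assumed semantics)."""
    rhs: float = 1.0
    true_for: float = 30.0
    _held: float = 0.0

    def step(self, lhs: float, dt: float) -> bool:
        # Accumulate time while the condition holds; reset as soon as it fails.
        self._held = self._held + dt if lhs < self.rhs else 0.0
        return self._held >= self.true_for


def switcher(switch: bool, on_signal: float = 0.0, off_signal: float = 10.0) -> float:
    """Pick the RATE_LIMIT value: 0.0 while escalated, 10.0 otherwise."""
    return on_signal if switch else off_signal


@dataclass
class RateLimiter:
    """Token bucket per key (the policy keys buckets by http.request.header.user_id)."""
    _tokens: dict = field(default_factory=dict)

    def refill(self, bucket_capacity: float, fill_amount: float) -> None:
        # Call once per interval (1s in the policy).
        for key in self._tokens:
            self._tokens[key] = min(bucket_capacity, self._tokens[key] + fill_amount)

    def allow(self, key: str, bucket_capacity: float) -> bool:
        # New keys start with a full bucket; existing buckets are clamped to capacity.
        tokens = min(self._tokens.get(key, bucket_capacity), bucket_capacity)
        allowed = tokens >= 1
        self._tokens[key] = tokens - 1 if allowed else tokens
        return allowed


# 30 seconds of sustained overload: DESIRED_LOAD_MULTIPLIER stays at 0.8.
decider = Decider()
escalated = False
for _ in range(60):  # 60 ticks of 0.5s
    escalated = decider.step(lhs=0.8, dt=0.5)

rate_limit = switcher(escalated)  # bucket capacity and fill amount for crawlers
limiter = RateLimiter()
print(escalated, rate_limit, limiter.allow("crawler-1", rate_limit))
```

In this model, a crawler may send up to 10 requests per second per user ID under normal conditions, and once the overload has persisted for 30 seconds the limit drops to 0, so crawler requests are rejected until the load multiplier recovers.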

Start the Playground

Once you understand the Nginx configuration and have completed the playground prerequisites, run the following commands from the directory that contains the cloned aperture repository.

    # Change directory to the playground
    cd aperture/playground
    tilt up -- --scenario=./scenarios/service-protection-rl-escalation-nginx

This will start all services and resources. Now, head over to Grafana at localhost:6000/

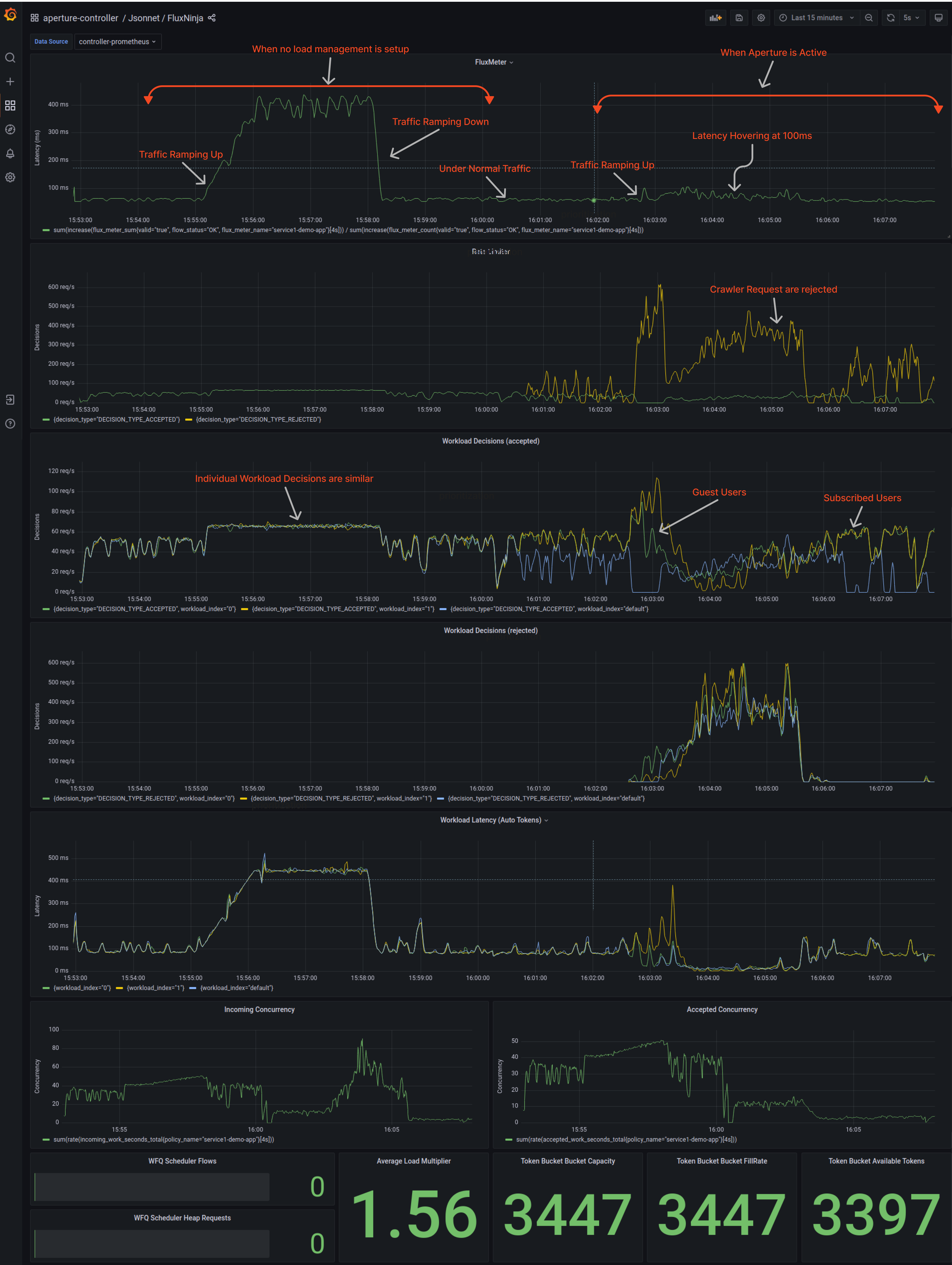

What are the consequences of not implementing load management?

In the snapshot of the Grafana dashboard below, you can see that the latency increases to 400ms as the number of users increases. If the number of users continues to increase, it can overload the service, leading to unrecoverable errors and cascading failure scenarios. Additionally, there is no prioritization between subscribed and guest users, and crawler traffic is allowed at all times, contributing to a rise in the overall latency of the service.

These are some of the key consequences:

- Increased latency and slower response times for users.

- Increased server load and potential overload, leading to unrecoverable errors and crashes.

- Cascading failure scenarios that can affect other parts of the system.

- No prioritization between different types of users, leading to poor user experience for some users.

- Allowing crawler traffic at all times, contributing to a rise in overall latency and server load.

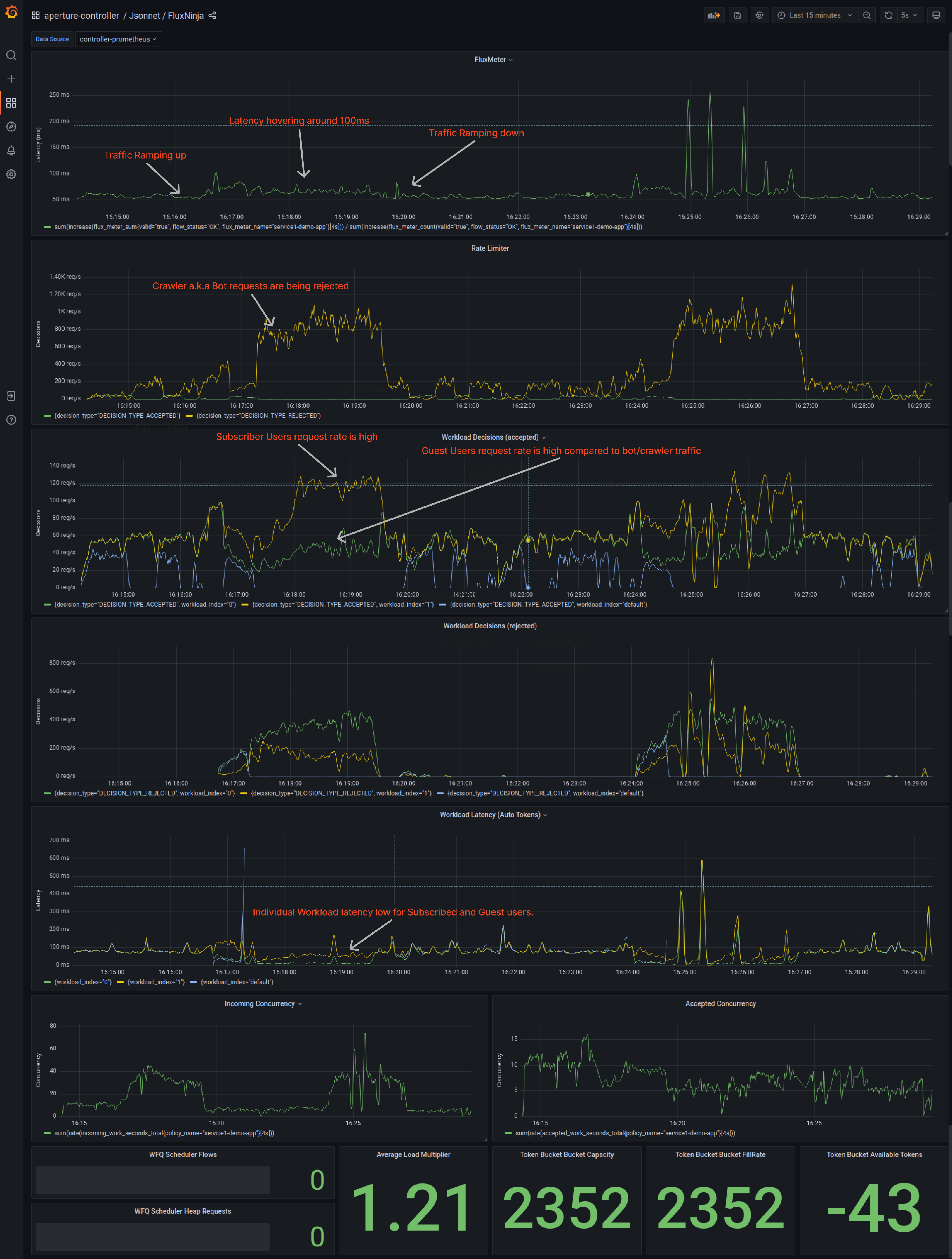

When Aperture is integrated with Nginx

Aperture provides intelligent load management: it doesn’t act on just one signal, but on the overall performance of the system, observed through golden signals. Once Aperture is in the picture, system performance improves significantly, and service latency hovers around 150ms.

Rate limiting restricts all crawler traffic based on the label key configured in the policy. We can see how well the system prioritizes the workload for each user type, i.e., subscribed and guest.

Latency for individual workloads drops significantly, from ~400ms to 200ms.
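
To illustrate what prioritization between user types means when capacity is limited, here is a deliberately simplified Python sketch. It uses strict priority ordering, which is a swapped-in simplification and not Aperture's actual scheduling algorithm. The priority values for subscriber and guest come from the policy above, while DEFAULT_PRIORITY and the admit function are hypothetical.

```python
# Priorities taken from the policy's scheduler workloads.
PRIORITIES = {"subscriber": 200.0, "guest": 50.0}
# Hypothetical fallback for traffic that matches no listed workload.
DEFAULT_PRIORITY = 1.0


def admit(requests, capacity):
    """Admit up to `capacity` requests, highest priority first.

    Ties are broken by arrival order, and admitted requests are
    returned in their original arrival order.
    """
    ranked = sorted(
        enumerate(requests),
        key=lambda item: (-PRIORITIES.get(item[1], DEFAULT_PRIORITY), item[0]),
    )
    admitted_indexes = sorted(index for index, _ in ranked[:capacity])
    return [requests[index] for index in admitted_indexes]


queue = ["guest", "subscriber", "guest", "subscriber", "guest"]
print(admit(queue, 3))
```

With room for only three of the five queued requests, both subscriber requests are admitted and the remaining slot goes to the earliest guest request.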

System Overview

The impact of Aperture on reliability can be better understood by examining the graph below. Before integrating Aperture, the latency peak was around 400ms. However, after integrating Aperture, the latency significantly decreased to less than 150ms, which is a remarkable difference. This is particularly significant given that some organizations work hard to reduce latency by as little as 10ms.

This example clearly demonstrates how Aperture's techniques have improved the performance and reliability of the e-commerce website. By implementing Aperture's load management strategy, the service was able to handle high-traffic loads and prevent any downtime effectively.

Conclusion

In conclusion, managing high-traffic e-commerce websites with Nginx can be a daunting task, but integrating FluxNinja Aperture can make it easier. We have discussed the benefits of using Aperture to manage load and prevent server crashes, as well as the various techniques that Aperture offers to help manage high-traffic loads. By implementing Aperture's techniques, websites can handle high-traffic loads, prevent downtime, and ensure a consistent and reliable user experience even during peak periods. With Aperture, load management becomes more efficient, allowing websites to focus on providing high-quality service to their customers.

We invite you to sign up for Aperture Cloud, offering Aperture as a service with robust traffic analytics, alerts, and policy management. Learn more about using Aperture open-source for reliability automation by visiting our GitHub repository and documentation site. Lastly, we welcome you to join our vibrant Discord community to discuss best practices, ask questions, and engage in insightful discussions with like-minded individuals.