Have you ever tried to buy a ticket online for a concert and had to wait or refresh the page every three seconds when an unexpected error appeared? Or have you ever tried to purchase something during Black Friday and experienced moments of anxiety because the loader just kept on… well… loading, and nothing appeared? We all know it, and we all get frustrated when errors occur and we don't know what's wrong with the website and why we can't buy the ticket we want.

Websites undergo periods of high demand all the time, and these periods affect the site's performance, the owners' revenues (because they obviously want to sell us things), and our experiences as users. Sure, understanding the situation is valuable, but more crucial is knowing how to manage it. We can take some proactive steps to make sure everything's running at its best and deliver the service to as many people as possible. It's like web-based utilitarianism; let's maximize user satisfaction while mitigating any adverse outcomes.

What Exactly is High Demand?

High demand is a significant increase in user traffic, often exceeding the normal capacity of a website or online service. Website owners provision enough capacity for a certain number of users to use the site simultaneously. But sometimes a popular marketing campaign, a viral social media post, or a seasonal event like Black Friday makes users rush to visit the site. When high demand strikes, the server-side infrastructure faces challenges that can hinder performance and user experience.

There can be various causes for poor site performance. When websites get busy, they don't work as smoothly as they should. This can really frustrate users to the point they leave. Fewer users lead to a loss of revenues and reputation. Research indicates that the first 20 seconds on a website are critical, as people typically leave within this time frame if they can't find the content they're after.

Reasons for poor site performance:

- Increased traffic volume during periods of high demand. This sudden influx can strain the servers, causing slower response times (this is when the loader keeps on rolling), delays in content delivery, and even website crashes.

- Retry storm: When a request is rejected or fails, some systems automatically retry it. Under load, these repeated attempts can feed a vicious cycle called a retry storm, which adds even more traffic to the already overwhelmed infrastructure and can make performance worse.

- Internal service deploys: Sometimes, updates rolled out behind the scenes cause performance hiccups and temporarily reduce capacity. For users, the service may run slowly because it is under-provisioned for the moment.

- High demand also impacts database performance. Frequent read and write operations from a large number of users can cause database congestion, resulting in slower query response times. For example, if a user adds a product to their cart while the database is congested, it may struggle to retrieve inventory data, leading to a crash.

Handling High Demand

At FluxNinja, we understand how tricky it can be for users as well as the system when there's a huge demand, and we've got some strategies to handle these busy times.

Adaptive service protection is one way to ensure efficiency and a smooth user experience. In web infrastructure, it operates like a gatekeeper who determines which requests to admit and which to drop. When the infrastructure approaches maximum capacity, our agents might decide to temporarily deny entry, warding off potential overloads. This gatekeeper can have users wait—some longer than others—and can also discard less important requests. The priority of requests is determined based on the infrastructure's needs and the business goals.

Additional techniques such as concurrency limiting and rate limiting help keep the flow of users steady. In a restaurant scenario, concurrency limiting works like a doorman who allows only a specific number of guests inside at the same time, so the restaurant stays manageable. If the doorman recognizes a VIP in the crowd and there's only one table left, he can choose to admit the VIP. Much like a server rejecting incoming requests to prevent system overload, the doorman may temporarily deny entry once the restaurant reaches its full capacity.
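Concurrency limiting caps how many requests are being served at any one moment. A minimal sketch in Python could use a semaphore as the doorman's headcount; the `ConcurrencyLimiter` class and its method names are hypothetical, and the VIP priority from the analogy is left out to keep it short.

```python
import threading


class ConcurrencyLimiter:
    """Admits at most `max_in_flight` requests at the same time."""

    def __init__(self, max_in_flight: int) -> None:
        self._slots = threading.BoundedSemaphore(max_in_flight)

    def try_enter(self) -> bool:
        # Non-blocking: return False right away when every slot is taken.
        return self._slots.acquire(blocking=False)

    def leave(self) -> None:
        # Free a slot once the request has finished.
        self._slots.release()


limiter = ConcurrencyLimiter(max_in_flight=2)
print(limiter.try_enter())  # True
print(limiter.try_enter())  # True
print(limiter.try_enter())  # False, both slots are busy
limiter.leave()             # one request finishes
print(limiter.try_enter())  # True again
```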

Rate limiting, on the other hand, is all about setting boundaries. Let's imagine an amusement park: everyone can enter, even when it's busy, but visitors are limited to two flat rides and one roller coaster ride per hour. Once they've enjoyed the attractions, they cannot use them again until the limit resets.
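The amusement park rule maps naturally onto a fixed-window rate limiter: each visitor gets a budget per attraction that resets when the window ends. The sketch below is one possible way to express it in Python. The class name, the `clock` parameter (injected so the limiter is easy to test), and the visitor key are assumptions, and other algorithms such as token buckets are equally common.

```python
import time


class FixedWindowRateLimiter:
    """Allows `limit` actions per key within each window of `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float, clock=time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        self._windows = {}  # key -> (window_start, count)

    def allow(self, key: str) -> bool:
        now = self._clock()
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # the limit resets with a new window
        if count >= self.limit:
            self._windows[key] = (start, count)
            return False
        self._windows[key] = (start, count + 1)
        return True


# One roller coaster ride per visitor per hour.
coaster = FixedWindowRateLimiter(limit=1, window_seconds=3600)
print(coaster.allow("visitor-42"))  # True, first ride this hour
print(coaster.allow("visitor-42"))  # False until the window resets
```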

When things get busy, it may be useful to implement graceful degradation, meaning to put some of the less crucial features on the back burner for a bit. Our team at FluxNinja has developed a tool that allows resource adjustments based on the settings given by the site owner. This way, we make sure that the critical resources get prioritized and keep working smoothly, even if we have to give some of the less essential features a little time-out.
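As a rough illustration of graceful degradation, the Python sketch below switches features off as load rises while keeping the critical path on. The feature names, thresholds, and the `enabled_features` helper are hypothetical and do not describe the FluxNinja tool itself.

```python
# Hypothetical feature table: each feature is switched off once load
# (0.0 = idle, 1.0 = at capacity) reaches its threshold.
DISABLE_AT_LOAD = {
    "recommendations": 0.70,
    "reviews": 0.85,
    "checkout": None,  # critical: never disabled
}


def enabled_features(load: float) -> set:
    """Return the features that should stay on at the given load."""
    return {
        name
        for name, threshold in DISABLE_AT_LOAD.items()
        if threshold is None or load < threshold
    }


print(sorted(enabled_features(0.50)))  # ['checkout', 'recommendations', 'reviews']
print(sorted(enabled_features(0.90)))  # ['checkout']
```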

If there are users who are already signed in, they've bought products before, and right now, they've got items in their cart, we'd make sure they get first dibs to finish their purchase. On the other hand, a user who has just landed on the website to browse for products might find themselves lower on the priority list. But every user matters: that's why FluxNinja employs a weighted fair queuing scheduler that reviews all requests and occasionally admits even the less critical ones.

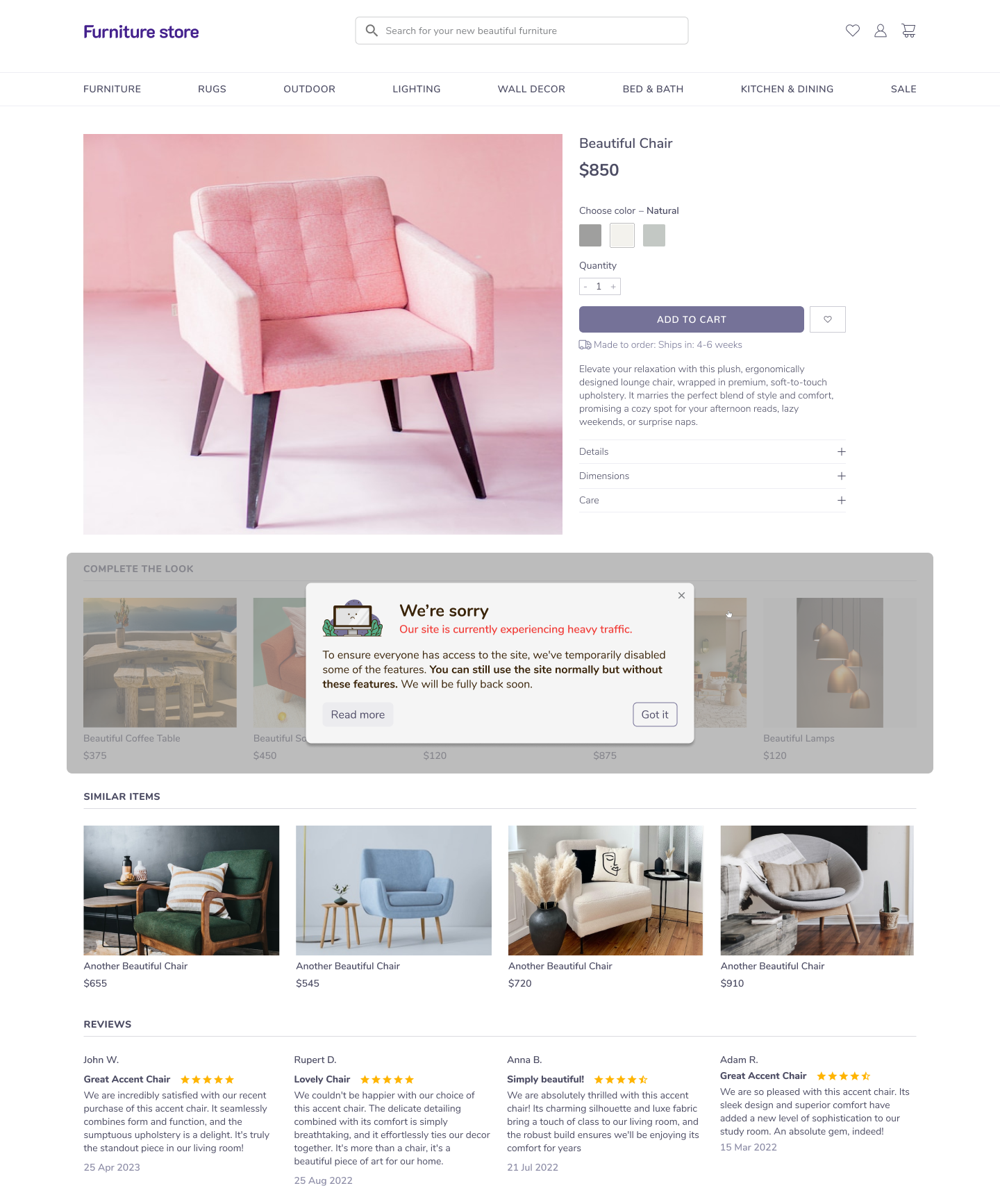
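The following Python sketch shows the general idea behind weighted fair queuing, using a simplified variant based on virtual finish times. It is an illustration only, not FluxNinja's scheduler: the class, the workload names, and the 4:1 weights are assumptions.

```python
import heapq
import itertools


class WeightedFairQueue:
    """Serves requests in order of virtual finish time (simplified WFQ)."""

    def __init__(self, weights: dict) -> None:
        self._weights = weights                       # workload name -> weight
        self._last_finish = {name: 0.0 for name in weights}
        self._virtual_time = 0.0
        self._heap = []                               # (finish, seq, request)
        self._seq = itertools.count()                 # tie-breaker: arrival order

    def enqueue(self, workload: str, request: str, cost: float = 1.0) -> None:
        # A higher weight means a smaller step, so that workload is served more often.
        start = max(self._virtual_time, self._last_finish[workload])
        finish = start + cost / self._weights[workload]
        self._last_finish[workload] = finish
        heapq.heappush(self._heap, (finish, next(self._seq), request))

    def dequeue(self) -> str:
        finish, _, request = heapq.heappop(self._heap)
        self._virtual_time = finish
        return request


# Checkout traffic gets four times the share of casual browsing.
queue = WeightedFairQueue({"checkout": 4, "browse": 1})
for i in range(1, 3):
    queue.enqueue("browse", f"browse-{i}")
for i in range(1, 7):
    queue.enqueue("checkout", f"checkout-{i}")

order = [queue.dequeue() for _ in range(8)]
print(order)
```

With these weights, checkout requests dominate the front of the queue, yet the first browse request is still served after three checkouts instead of waiting for all six. That is the "every user matters" property: low-priority work is slowed down, not starved.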

When it comes to limiting access to non-essential features, imagine a user casually browsing the product page. They might encounter a greyed-out "Similar items you might like" section, accompanied by a message letting them know about the current high traffic situation to prevent any frustration. Similar to the restaurant doorman example where guests are informed about potential wait times or limitations, FluxNinja aims to provide users with important information.

ℹ️ Image: When a user hovers over the 'Complete the Look' section, the section automatically stops on a snapshot, and a pop-up appears to provide an explanation about what is happening.

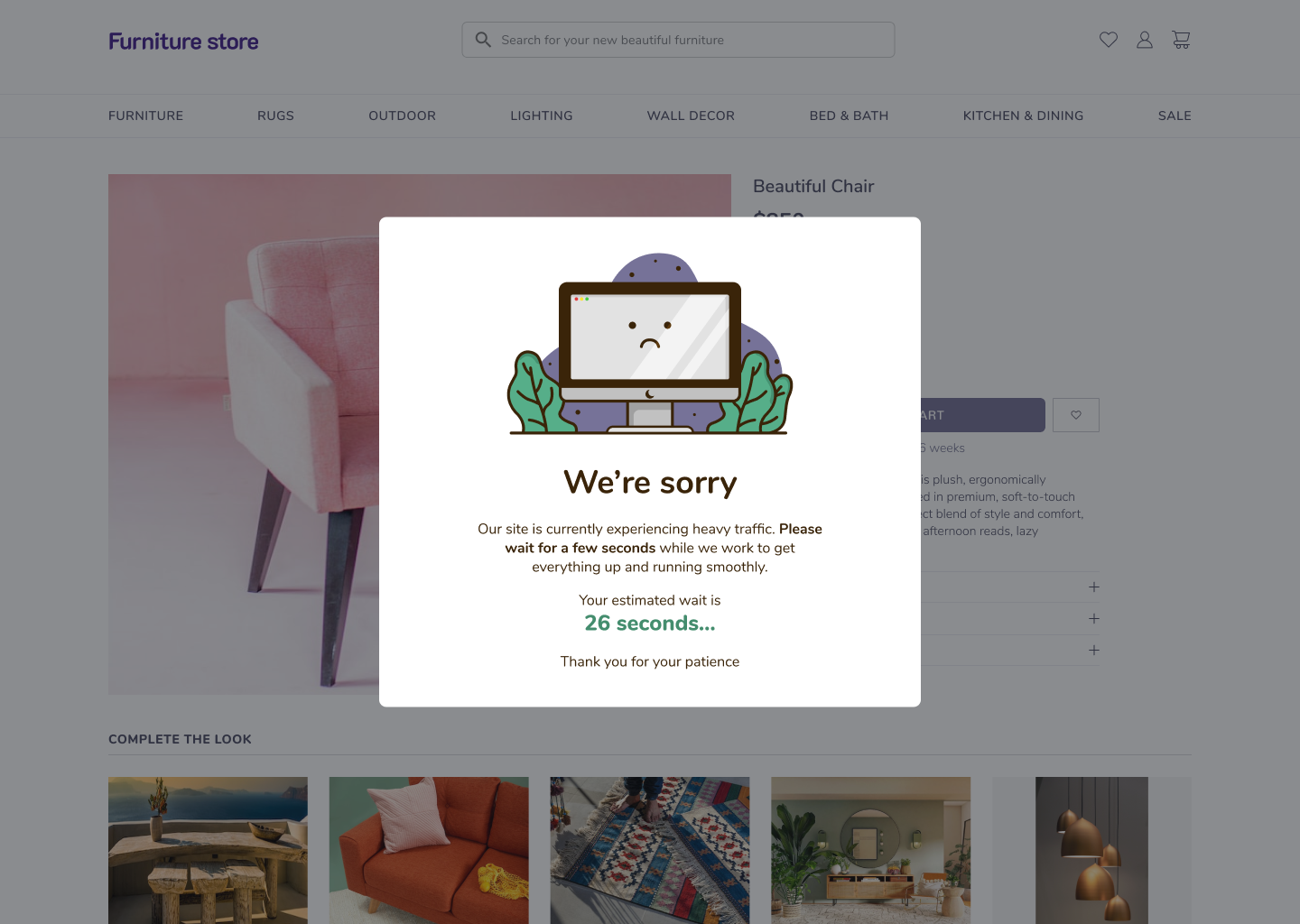

When things get busy, FluxNinja uses a queuing system to handle all the incoming requests. This means users might have to wait a bit before they get to see what they want, but at least they won't just hit an error message. We also try to keep things clear by showing estimated wait times, and we use some queuing tricks to keep those waits as short as possible.

ℹ️ Image: During times when the infrastructure is overloaded, FluxNinja can display information about the situation and create a queue to prevent crashes. In this way, users are kept informed about what is happening. This acknowledgment significantly reduces the chance of users becoming frustrated.
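One simple way to estimate a wait time is to divide a user's position in the queue by the rate at which users are admitted. The Python sketch below assumes a fixed, known admission rate; the `WaitingRoom` class and its methods are hypothetical, and a production system would measure the rate continuously.

```python
from collections import deque


class WaitingRoom:
    """FIFO queue that estimates waits from a known admission rate."""

    def __init__(self, admits_per_second: float) -> None:
        self.admits_per_second = admits_per_second
        self._queue = deque()

    def join(self, user_id: str) -> float:
        """Add a user and return their estimated wait in seconds."""
        self._queue.append(user_id)
        return self.estimated_wait(user_id)

    def estimated_wait(self, user_id: str) -> float:
        position = self._queue.index(user_id) + 1  # 1 = next in line
        return position / self.admits_per_second

    def admit_next(self) -> str:
        """Let the user at the front of the queue through."""
        return self._queue.popleft()


room = WaitingRoom(admits_per_second=2.0)
room.join("alice")
room.join("bob")
print(room.join("carol"))            # 1.5 seconds: third in line at 2 admits/s
room.admit_next()                    # alice is admitted
print(room.estimated_wait("carol"))  # 1.0 second: now second in line
```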

At FluxNinja, we understand that being left in the dark during high-demand periods can be a bummer. Well, it only takes a few seconds of frustration before a future customer might bid farewell to a site for good. That's why if things are a bit slower than usual, we'll keep users in the loop with regular updates about what's up, what limitations might be in place for a bit, and how we're working to get everything up and running nicely again. We've got systems in place to spot issues that are happening, or even the ones that only loom on the horizon. That way, we either prevent them from happening or fix them up with minimal impact. By keeping a close eye on important performance metrics and having automatic alerts, we can quickly pinpoint and sort out these kinds of problems. We do all we can to make sure users keep having a top-notch experience, no matter what.

Online services have become a huge part of our lives, and as long as we're able to use them without any significant disruptions, we're indifferent to the stress that might be put on the system supporting them. The modern web has taught us to expect everything fast and to feel one click away from the product or service we're dreaming of. When that one click turns into minutes or, worse, into some 404 oops-page-not-found, we lose trust and move on to another site that can give us what we want in the time frame we're used to. That's why it's so important to get ahead of the game. From auto-scaling and load scheduling to request prioritization and per-user rate limits, FluxNinja's got high demand handled. By rolling out these tactics, FluxNinja aims to cut down on wait times, boost uptime, and provide a reliable, smooth experience for users, even when it's rush hour on the web.